This is a series!

Part one: You're here!

Part two: Stable Diffusion Updates

(Want just the bare tl;dr bones? Go read this Gist by harishanand95. It says everything this does, but for a more experienced audience.)

Stable Diffusion has recently taken the techier (and art-techier) parts of the internet by storm. It's an open-source machine learning model capable of taking in a text prompt, and (with enough effort) generating some genuinely incredible output.

See the cover image for this article? That was generated by a version of Stable Diffusion trained on lots and lots of My Little Pony art. The prompt I used for that image was kirin, pony, sumi-e, painting, traditional, ink on canvas, trending on artstation, high quality, art by sesshu.

Unfortunately, in its current state, it relies on Nvidia's CUDA framework, which means that it only works out of the box if you've got an Nvidia GPU.

Fear not, however. Because Stable Diffusion is both a) open source and b) good, it has seen an absolute flurry of activity, and some enterprising folks have done the legwork to make it usable for AMD GPUs, even for Windows users.

Requirements

Before you get started, you'll need the following:

- A reasonably powerful AMD GPU with at least 6GB of video memory. I'm using an AMD Radeon RX 5700 XT, with 8GB, which is just barely powerful enough to outdo running this on my CPU.

- A working Python installation, version 3.7 or later. v3.7, v3.8, v3.9, and v3.10 should all work.

- The fortitude to download around 6 gigabytes of machine learning model data.

- A Hugging Face account. Go on, go sign up for one, it's free.

- A working installation of Git, because the Hugging Face login process stores its credentials there, for some reason.
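If you want to double-check the Python requirement before going further, the snippet below is one way to do it. It's a small sketch rather than part of the original process. `python_version_ok` is a hypothetical helper, and the 3.7 to 3.10 range simply mirrors the list above. Running `python --version` tells you the same thing.

```python
import sys

# Range taken from the requirements list above (3.7 through 3.10).
SUPPORTED_MIN = (3, 7)
SUPPORTED_MAX = (3, 10)


def python_version_ok(version_info=sys.version_info) -> bool:
    """Return True if the interpreter's major.minor is inside the listed range."""
    major_minor = (version_info[0], version_info[1])
    return SUPPORTED_MIN <= major_minor <= SUPPORTED_MAX


if __name__ == "__main__":
    current = f"{sys.version_info[0]}.{sys.version_info[1]}"
    if python_version_ok():
        print(f"Python {current} should be fine.")
    else:
        print(f"Python {current} is outside the 3.7-3.10 range this guide was written for.")
```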

The Process

I'll assume you have no, or little, experience in Python. My only assumption is that you have it installed, and that when you run python --version and pip --version from a command line, they respond appropriately.

Preparing the workspace

Before you begin, create a new folder somewhere. I named mine stable-diffusion. The name doesn't matter.

Once created, open a command line in your favorite shell (I'm a PowerShell fan myself) and navigate to your new folder. We're going to create a virtual environment to install some packages into.

When there, run the following:

python -m venv ./virtualenv

This will use the venv package to create a virtual environment named virtualenv. Now, you need to activate it. Run the following:

# For PowerShell

./virtualenv/Scripts/Activate.ps1

rem For cmd.exe

virtualenv\Scripts\activate.bat

Now, anything you install via pip or run via python will only be installed or run in the context of this environment we've named virtualenv. If you want to leave it, you can just run deactivate at any time.
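It's easy to lose track of whether the environment is active, especially after you close and reopen your shell. Here's a minimal sketch for checking from inside Python. It assumes a standard `venv` environment, where `sys.prefix` points at the environment folder while `sys.base_prefix` still points at the base Python installation. `in_virtualenv` is a hypothetical name.

```python
import sys


def in_virtualenv(prefix: str = sys.prefix, base_prefix: str = sys.base_prefix) -> bool:
    """Inside a venv, sys.prefix is the environment folder, while
    sys.base_prefix still points at the base Python installation."""
    return prefix != base_prefix


if __name__ == "__main__":
    if in_virtualenv():
        print(f"Running inside a virtual environment: {sys.prefix}")
    else:
        print("Not in a virtual environment. Did you run Activate.ps1 / activate.bat?")
```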

Okay. All set up, let's start installing the things we need.

Installing Dependencies

We need a few Python packages, so we'll use pip to install them into the virtual environment, like so:

pip install diffusers==0.3.0

pip install transformers

pip install onnxruntime

Now, we need to go and download a build of Microsoft's DirectML Onnx runtime. Unfortunately, at the time of writing, none of their stable packages are up-to-date enough to do what we need. So instead, we need to either a) compile from source or b) use one of their precompiled nightly packages.

Because the toolchain to build the runtime is a bit more involved than this guide assumes, we'll go with option b).

Head over to https://aiinfra.visualstudio.com/PublicPackages/_artifacts/feed/ORT-Nightly/PyPI/ort-nightly-directml/overview/1.13.0.dev20220908001

(Or, if you're the suspicious sort, you could go to https://aiinfra.visualstudio.com/PublicPackages/_artifacts/feed/ORT-Nightly and grab the latest under ort-nightly-directml yourself).

Either way, download the package that corresponds to your installed Python version: ort_nightly_directml-1.13.0.dev20220913011-cp37-cp37m-win_amd64.whl for Python 3.7, ort_nightly_directml-1.13.0.dev20220913011-cp38-cp38-win_amd64.whl for Python 3.8, you get the idea.
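If you're unsure which file matches your interpreter, the `cpXY` part of the filename is what matters. The sketch below uses two hypothetical helpers, `python_tag` and `pick_wheel`. It assumes the standard wheel naming scheme `{name}-{version}-{python tag}-{abi tag}-{platform}.whl` with no optional build tag, and picks the file whose Python tag matches the interpreter running it.

```python
import os
import sys


def python_tag(version_info=sys.version_info) -> str:
    """CPython wheel tag for an interpreter version, e.g. (3, 8) -> 'cp38'."""
    return f"cp{version_info[0]}{version_info[1]}"


def pick_wheel(filenames, version_info=sys.version_info):
    """Return the first wheel whose Python tag matches, or None.

    Assumes '{name}-{version}-{python tag}-{abi tag}-{platform}.whl' with no
    build tag, so the Python tag is the third dash-separated field.
    """
    wanted = python_tag(version_info)
    for name in filenames:
        if not name.endswith(".whl"):
            continue
        parts = name[: -len(".whl")].split("-")
        if len(parts) == 5 and parts[2] == wanted:
            return name
    return None


if __name__ == "__main__":
    # Run this in the folder you downloaded the wheels into.
    wheels = [f for f in os.listdir(".") if f.endswith(".whl")]
    print("Your interpreter's tag:", python_tag())
    print("Matching wheel:", pick_wheel(wheels))
```

For example, `python_tag()` returns `cp38` when run under Python 3.8.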

Once it's downloaded, use pip to install it.

pip install pathToYourDownloadedFile/ort_nightly_whatever_version_you_got.whl --force-reinstall

Take note of that --force-reinstall flag! The package will override some previously-installed dependencies, but if you don't allow it to do so, things won't work further down the line. Ask me how I know >.>

Getting and Converting the Stable Diffusion Model

First thing, we're going to download a little utility script that will automatically download the Stable Diffusion model, convert it to Onnx format, and put it somewhere useful. Go ahead and download https://raw.githubusercontent.com/huggingface/diffusers/main/scripts/convert_stable_diffusion_checkpoint_to_onnx.py (i.e. copy the contents, place them into a text file, and save it as convert_stable_diffusion_checkpoint_to_onnx.py) and place it next to your virtualenv folder.
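If copy-pasting feels error-prone, you could fetch the script with Python's standard library instead. This is an optional sketch, not a required step. `filename_from_url` and `download_script` are hypothetical helpers. The URL is the one given above, and the code assumes the script still lives at that path on the diffusers `main` branch.

```python
import os
import posixpath
import urllib.request
from urllib.parse import urlparse

# URL taken from the guide; assumes the script still lives at this path on `main`.
SCRIPT_URL = (
    "https://raw.githubusercontent.com/huggingface/diffusers/main/"
    "scripts/convert_stable_diffusion_checkpoint_to_onnx.py"
)


def filename_from_url(url: str) -> str:
    """Return the last path segment of a URL (query strings are ignored)."""
    return posixpath.basename(urlparse(url).path)


def download_script(url: str = SCRIPT_URL, dest_dir: str = ".") -> str:
    """Download the raw file into dest_dir and return the saved path."""
    dest = os.path.join(dest_dir, filename_from_url(url))
    urllib.request.urlretrieve(url, dest)
    return dest


if __name__ == "__main__":
    # Run from the folder that contains your virtualenv folder.
    print("Saved to", download_script())
```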

Now is when that Hugging Face account comes into play. The Stable Diffusion model is hosted here, and you need an API key to download it. Once you sign up, you can find your API key by going to the website, clicking on your profile picture at the top right -> Settings -> Access Tokens.

Once you have your token, authenticate your shell with it by running the following:

huggingface-cli.exe login

And paste in your token when prompted.

Note: If you get an error with a stack trace that ends with something like this:

File "C:\Python310\lib\subprocess.py", line 1438, in _execute_child

hp, ht, pid, tid = _winapi.CreateProcess(executable, args,

FileNotFoundError: [WinError 2] The system cannot find the file specified

...then that probably means that you don't have Git installed. The huggingface-cli tool uses Git to store login credentials.
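That error means Windows couldn't find an executable, so one quick check is whether Git is on your PATH at all. Here's a minimal sketch using `shutil.which`. It assumes the CLI looks for an executable named `git`. That assumption is inferred from the error above, not confirmed in the huggingface_hub source. `find_git` is a hypothetical helper.

```python
import shutil


def find_git():
    """Return the full path of the `git` executable on PATH, or None if it's missing."""
    return shutil.which("git")


if __name__ == "__main__":
    git = find_git()
    if git is None:
        print("Git not found on PATH; huggingface-cli login will likely fail with WinError 2.")
    else:
        print(f"Found Git at {git}")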
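That error means Windows couldn't find an executable, so one quick check is whether Git is on your PATH at all. Here's a minimal sketch using `shutil.which`. It assumes the CLI looks for an executable named `git`. That assumption is inferred from the error above, not confirmed in the huggingface_hub source. `find_git` is a hypothetical helper.

```python
import shutil


def find_git():
    """Return the full path of the `git` executable on PATH, or None if it's missing."""
    return shutil.which("git")


if __name__ == "__main__":
    git = find_git()
    if git is None:
        print("Git not found on PATH; huggingface-cli login will likely fail with WinError 2.")
    else:
        print(f"Found Git at {git}")
```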

Once that's done, we can run the utility script.

python convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="./stable_diffusion_onnx"

--model_path is the path on Hugging Face to go and find the model. --output_path is the path on your local filesystem to place the now-Onnx'ed model into.
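You might prefer to start the conversion from Python, or want a clearer error than `[Errno 2] No such file or directory` when the script isn't in the current folder. Here's a sketch for either case. `build_convert_command` is a hypothetical helper. It runs the script with `sys.executable`, the same interpreter as the one running this code, so it should pick up your activated virtual environment.

```python
import os
import subprocess
import sys


def build_convert_command(script="convert_stable_diffusion_checkpoint_to_onnx.py",
                          model_path="CompVis/stable-diffusion-v1-4",
                          output_path="./stable_diffusion_onnx"):
    """Build the argument list for the conversion command shown above."""
    if not os.path.isfile(script):
        # Fail early with a message that says where we looked.
        raise FileNotFoundError(f"{script} not found in {os.getcwd()}; save it there first.")
    return [sys.executable, script,
            f"--model_path={model_path}",
            f"--output_path={output_path}"]


if __name__ == "__main__":
    subprocess.run(build_convert_command(), check=True)
```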

Sit back and relax--this is where that 6GB download comes into play. Depending on your connection speed, this may take some time.

...done? Good. Now, you should have a folder named stable_diffusion_onnx which contains an Onnx-ified version of the Stable Diffusion model.

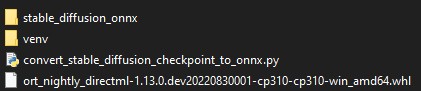

Your folder structure should now look something like this:


(I named my virtual environment venv instead of virtualenv. Same same though.)
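To sanity-check the conversion, you could look for the ONNX files it should have produced. This is a sketch under an assumption. The expected layout (one subfolder per component, each containing a `model.onnx`) is my reading of what the converter writes, so adjust `EXPECTED_ONNX_MODELS` if your folder looks different. `missing_components` is a hypothetical helper.

```python
import os

# Assumed layout: one subfolder per ONNX component, each holding a model.onnx.
# Adjust this tuple if your converter version writes something else.
EXPECTED_ONNX_MODELS = ("text_encoder", "unet", "vae_decoder", "safety_checker")


def missing_components(root="./stable_diffusion_onnx", expected=EXPECTED_ONNX_MODELS):
    """Return the expected component folders whose model.onnx file is missing."""
    return [name for name in expected
            if not os.path.isfile(os.path.join(root, name, "model.onnx"))]


if __name__ == "__main__":
    missing = missing_components()
    if missing:
        print("Missing ONNX models for:", ", ".join(missing))
    else:
        print("All expected ONNX components found.")
```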

Almost there.

Running Stable Diffusion

Now, you just have to write a tiny bit of Python code. Let's create a new file, and call it text2img.py. Inside of it, write the following:

from diffusers import StableDiffusionOnnxPipeline

pipe = StableDiffusionOnnxPipeline.from_pretrained("./stable_diffusion_onnx", provider="DmlExecutionProvider")

prompt = "A happy celebrating robot on a mountaintop, happy, landscape, dramatic lighting, art by artgerm greg rutkowski alphonse mucha, 4k uhd"

image = pipe(prompt).images[0]

image.save("output.png")

Take note of the first argument we pass to StableDiffusionOnnxPipeline.from_pretrained(): "./stable_diffusion_onnx". That's the file path to the Onnx-ified model we just created.

And provider needs to be "DmlExecutionProvider" in order to actually instruct Stable Diffusion to use DirectML, instead of the CPU.
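If you're not sure DirectML is actually being used, you can ask ONNX Runtime which execution providers it has. `onnxruntime.get_available_providers()` is part of ONNX Runtime's Python API. The `dml_available` wrapper is a hypothetical helper. If `DmlExecutionProvider` isn't in the list, inference falls back to the CPU, and ONNX Runtime prints a UserWarning saying the specified provider is not among the available ones.

```python
def dml_available(providers) -> bool:
    """True if DirectML is among the ONNX Runtime execution providers."""
    return "DmlExecutionProvider" in providers


if __name__ == "__main__":
    import onnxruntime as ort  # the DirectML nightly installs under this name

    providers = ort.get_available_providers()
    print("Available providers:", providers)
    if not dml_available(providers):
        print("DirectML not available: inference will fall back to the CPU.")
```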

Once that's saved, you can run it with python .\text2img.py.

Once it's done, you'll have an image named output.png that's hopefully close to what you asked for in prompt!

Bells and Whistles

Now, that was a little bit bare-minimum, particularly if you want to customize more than just your prompt. I've written a small script with a bit more customization, and a few notes to myself that I imagine some folks might find helpful. It looks like this:

from diffusers import StableDiffusionOnnxPipeline

import numpy as np

def get_latents_from_seed(seed: int, width: int, height: int) -> np.ndarray:
    # 1 is batch size
    latents_shape = (1, 4, height // 8, width // 8)
    # Gotta use numpy instead of torch, because torch's randn() doesn't support DML
    rng = np.random.default_rng(seed)
    image_latents = rng.standard_normal(latents_shape).astype(np.float32)
    return image_latents

pipe = StableDiffusionOnnxPipeline.from_pretrained("./stable_diffusion_onnx", provider="DmlExecutionProvider")

"""

prompt: Union[str, List[str]],

height: Optional[int] = 512,

width: Optional[int] = 512,

num_inference_steps: Optional[int] = 50,

guidance_scale: Optional[float] = 7.5, # This is also sometimes called the CFG value

eta: Optional[float] = 0.0,

latents: Optional[np.ndarray] = None,

output_type: Optional[str] = "pil",

"""

seed = 50033

# Generate our own latents so that we can provide a seed.

latents = get_latents_from_seed(seed, 512, 512)

prompt = "A happy celebrating robot on a mountaintop, happy, landscape, dramatic lighting, art by artgerm greg rutkowski alphonse mucha, 4k uhd"

image = pipe(prompt, num_inference_steps=25, guidance_scale=13, latents=latents).images[0]

image.save("output.png")

With this script, I can pass in an arbitrary seed value, easily customize the height and width, and in the triple-quote comments, I've added some notes about what arguments the pipe() function takes.
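The seed and the image size are linked. The latents have to match the output dimensions exactly, or the pipeline rejects them with an error like `Unexpected latents shape, got (1, 4, 32, 32), expected (1, 4, 64, 64)`. Below is a sketch of the shape arithmetic from `get_latents_from_seed()`, pulled out into a hypothetical `latents_shape` helper. The multiple-of-8 check is my addition.

```python
def latents_shape(width: int, height: int, batch_size: int = 1, channels: int = 4):
    """Shape of the latent noise array, as built in get_latents_from_seed().

    Each spatial dimension is the image size divided by 8, and 4 is the
    number of latent channels. The divisibility check is an added safeguard.
    """
    if width % 8 or height % 8:
        raise ValueError("width and height must be multiples of 8")
    return (batch_size, channels, height // 8, width // 8)
```

So if you generate latents for 256x256, pass `height=256, width=256` to `pipe()` as well.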

My plan is to wrap all of this up into an argument parser, so that I can just pass all of these parameters into the script without having to modify the source file itself, but I'll do that later.
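For anyone who wants to get there first, here's a sketch of such an argument parser using the standard `argparse` module. The flag names are hypothetical. Each one maps onto one of the `pipe()` arguments in the triple-quoted block above and uses the same default.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical flag names; defaults mirror the pipe() notes in the script above.
    parser = argparse.ArgumentParser(
        description="Text-to-image with the ONNX Stable Diffusion pipeline")
    parser.add_argument("prompt", help="text prompt")
    parser.add_argument("--seed", type=int, default=None,
                        help="seed for reproducible latents (None = random)")
    parser.add_argument("--steps", type=int, default=50, help="num_inference_steps")
    parser.add_argument("--guidance-scale", type=float, default=7.5, help="CFG value")
    parser.add_argument("--width", type=int, default=512)
    parser.add_argument("--height", type=int, default=512)
    parser.add_argument("--output", default="output.png")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args()
    # The values would then be fed to the script above, e.g.:
    # latents = get_latents_from_seed(args.seed, args.width, args.height)
    # image = pipe(args.prompt, height=args.height, width=args.width,
    #              num_inference_steps=args.steps,
    #              guidance_scale=args.guidance_scale, latents=latents).images[0]
    # image.save(args.output)
    print(args)
```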

Some Final Notes

- As far as I can tell, this is still a fair bit slower than running things on Nvidia hardware! I don't have any hard numbers to share, only anecdotal observations that this seems to be anywhere from 3x to 8x slower than it is for people on similar-specced Nvidia hardware.

- Currently, the Onnx pipeline doesn't support batching, so don't try to pass it multiple prompts, or it will be sad.

- All of this is changing at breakneck pace, so I fully expect about half of this blog post to be outdated a few weeks from now. Expect to have to do some legwork of your own. Sorry!

- There is a very good guide on how to use Stable Diffusion on Reddit that goes through the basics of what each of the parameters means, how it affects the output, and gives tips on what you can do to get better outputs.

Closing Thoughts

So hopefully, now you've got your AMD Windows machine generating some AI-powered images. As I said before, I expect much of this information to be out of date two weeks from now. I might try to keep this post updated if I find the time and inclination, but that depends a lot on how this develops, and my own free time. We'll see!

As ever, I can be found on GitHub as pingzing and Twitter as @pingzingy. Happy generating!

The text of this blog post is licensed under a Creative Commons Attribution 4.0 International License.


Stephen

Thu, Sep 15, 2022, 01:07:07

File "C:\stable-diffusion\stable-diffusion\text2img.py", line 12, in

pipe = StableDiffusionOnnxPipeline.from_pretrained("./stable_diffusion_onnx", provider="DmlExecutionProvider")

RuntimeError: D:\a\_work\1\s\onnxruntime\core\providers\dml\dml_provider_factory.cc(124)\onnxruntime_pybind11_state.pyd!00007FFF3E877BF3: (caller: 00007FFF3E7C9C16) Exception(1) tid(d50) 80070057 The parameter is incorrect.

MK

Thu, Sep 15, 2022, 01:09:08

Traceback (most recent call last):

File "C:\Program Files\WindowsApps\PythonSoftwareFoundation.Python.3.8_3.8.2800.0_x64__qbz5n2kfra8p0\lib\runpy.py", line 194, in _run_module_as_main

return _run_code(code, main_globals, None,

File "C:\Program Files\WindowsApps\PythonSoftwareFoundation.Python.3.8_3.8.2800.0_x64__qbz5n2kfra8p0\lib\runpy.py", line 87, in _run_code

exec(code, run_globals)

File "I:\stable-diffusion\virtualenv\Scripts\huggingface-cli.exe\__main__.py", line 7, in

File "i:\stable-diffusion\virtualenv\lib\site-packages\huggingface_hub\commands\huggingface_cli.py", line 41, in main

service.run()

File "i:\stable-diffusion\virtualenv\lib\site-packages\huggingface_hub\commands\user.py", line 176, in run

_login(self._api, token=token)

File "i:\stable-diffusion\virtualenv\lib\site-packages\huggingface_hub\commands\user.py", line 344, in _login

hf_api.set_access_token(token)

File "i:\stable-diffusion\virtualenv\lib\site-packages\huggingface_hub\hf_api.py", line 705, in set_access_token

write_to_credential_store(USERNAME_PLACEHOLDER, access_token)

File "i:\stable-diffusion\virtualenv\lib\site-packages\huggingface_hub\hf_api.py", line 528, in write_to_credential_store

with subprocess.Popen(

File "C:\Program Files\WindowsApps\PythonSoftwareFoundation.Python.3.8_3.8.2800.0_x64__qbz5n2kfra8p0\lib\subprocess.py", line 858, in __init__

self._execute_child(args, executable, preexec_fn, close_fds,

File "C:\Program Files\WindowsApps\PythonSoftwareFoundation.Python.3.8_3.8.2800.0_x64__qbz5n2kfra8p0\lib\subprocess.py", line 1311, in _execute_child

hp, ht, pid, tid = _winapi.CreateProcess(executable, args,

FileNotFoundError: [WinError 2] The system cannot find the file specified

(virtualenv) PS I:\stable-diffusion>

Neil

Thu, Sep 15, 2022, 08:27:18

Stephen: Yours is trickier. It looks like it's actually dying somewhere in DirectML's native C/C++ code itself, with a classically unhelpful "The parameter is incorrect." =/

Without more information about your setup, it's hard to say. I can say that I haven't seen that before, though. Are you running on an unusual system, like an ARM version of Windows, or something?

ponut64

Thu, Sep 15, 2022, 11:48:00

Note: Probably do not use a newer nightly version of DirectML. It may cause huggingface to misbehave. Or something.

CPU is AMD R5 5600G.

GPU is AMD RX 6600 (non-XT).

CPU time for a sample 256x256 image and prompt is 54 seconds.

GPU time for the same prompt and size is 34 seconds.

It helps!

As for the images it's producing, they all seem rather cursed. But such is the way of AI!

Conor

Thu, Sep 15, 2022, 12:15:37

Though I'm afraid it still seems to be throwing an error at me and I don't understand where I'm missing a step.

The error i get is as follows:

PS F:\Applications\AI\Stable-Diffusion> huggingface-cli.exe login

huggingface-cli.exe : The term 'huggingface-cli.exe' is not recognized as the name of a cmdlet, function, script file, or operable program. Check the spelling of the name, or if a path was included, verify

that the path is correct and try again.

At line:1 char:1

+ huggingface-cli.exe login

+ ~~~~~~~~~~~~~~~~~~~

+ CategoryInfo : ObjectNotFound: (huggingface-cli.exe:String) [], CommandNotFoundException

+ FullyQualifiedErrorId : CommandNotFoundException

If anyone can see what I'm doing wrong or has had the same issue I'd love to know!

Neil

Thu, Sep 15, 2022, 15:59:20

My guess would be that you didn't activate the virtual environment with .\virtualenv\Scripts\Activate.ps1. If you HAVE, you can probably just invoke it manually with .\virtualenv\Scripts\huggingface-cli.exe.

A good man

Thu, Sep 15, 2022, 16:24:45

- you need GIT or you won't be able to log into huggingface. You don't mention this I believe

- '---force-reinstall' has an extra -. Needs removing or command won't be recognized

-downloading the utility script may be confusing for newbies (was for me). Guessing what you meant by 'downloading' is selecting it all and copy-pasting into a notepad file and then changing to .py format. At least that's what worked for me

Conor

Thu, Sep 15, 2022, 18:08:57

Completely uninstalling Python, then reinstalling using the installer from the website, and checking the box to add to PATH during the install fixed my problem.

Andrew

Fri, Sep 16, 2022, 01:23:52

I cannot try generating a different image size (e.g. 256x256) since I get the following error:

ValueError: Unexpected latents shape, got (1, 4, 32, 32), expected (1, 4, 64, 64)

ponut64

Fri, Sep 16, 2022, 02:52:10

Try this line

image = pipe(prompt, height=320, width=320, guidance_scale=8, num_inference_steps=25).images[0]

You can adjust the height, steps, and such from here, and many other parameters that not even I know about.

Neil

Fri, Sep 16, 2022, 07:09:17

- Huh, I didn't even realize the CLI used Git as its credential storage medium. Whack. Thanks for the heads-up.

- Typo fixed, thanks.

- Added a bit of clarification around downloading the script, good point.

-Andrew-

If you're using the second Python script in the post, like ponut64 pointed out, you need to pass height and width arguments to pipe() as well as get_latents_from_seed(). (The only thing get_latents_from_seed() does is generate the randomness the image generation process uses, which for Reasons needs to know what the dimensions of the output will be.)

I'll probably put up a small follow-up post soon with an updated script and a few observations about the process. I've got a nicely cleaned-up script that just reads in arguments now, and is a bit neater.

Rev Hellfire

Fri, Sep 16, 2022, 13:27:56

One small typo though, "---force-reinstall" should be "--force-reinstall"

A good man

Fri, Sep 16, 2022, 14:13:49

This is very annoying as often I don't even try anything explicit nsfw. It just happens at random when u go by different art styles.

Neil

Fri, Sep 16, 2022, 14:16:37

Thanks for the heads-up! Fixed!

-A good man-

Yep, that's the safety checker. I'm going to address that in the follow-up post (because for some reason, it also seems to slow things down greatly), but the easiest way to disable it is to add the following line after defining pipe:

pipe.safety_checker = lambda images, **kwargs: (images, [False] * len(images))

That will replace pipe's safety checker with a dummy function that always returns "false" when it checks to see if the generated output would be considered NSFW.
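For readability, the same dummy can be written as a named function. This sketch assumes what the lambda's signature implies: the pipeline calls the checker with `images` plus other keyword arguments, and expects back a pair of (images, per-image NSFW flags). `no_safety_check` is a hypothetical name.

```python
def no_safety_check(images, **kwargs):
    """Stand-in for pipe.safety_checker: return the images untouched and flag none as NSFW.

    Same behaviour as: lambda images, **kwargs: (images, [False] * len(images))
    """
    return images, [False] * len(images)
```

Usage would then be `pipe.safety_checker = no_safety_check`.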

Eric

Fri, Sep 16, 2022, 14:59:14

Alex

Fri, Sep 16, 2022, 16:13:34

Marz

Fri, Sep 16, 2022, 17:03:37

I'm just having an issue I'm not quite sure how to fix.

When running..

python convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="./stable_diffusion_onnx"

I get this error..

C:\Users\tiny\AppData\Local\Programs\Python\Python310\python.exe: can't open file 'E:\\Desktop\\Stable Diffusion\\convert_stable_diffusion_checkpoint_to_onnx.py': [Errno 2] No such file or directory

I've followed the steps word for word so I'm not too sure where I'm messing up. Any help would be great, thank you!

ponut64

Fri, Sep 16, 2022, 17:57:19

You need to manually specify a directory on your system for it to put stable-diffusion.

It must be a full directory name, for example, D:\Library\stable-diffusion\stable_diffusion_onnx

Marz

Fri, Sep 16, 2022, 18:19:03

I did do that, no matter what I get the same error. I do have it set up properly (as far as I know), just didn't copy the exact prompt before. Here's what I have

(virtualenv) PS E:\Desktop\Stable Diffusion> convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="E:\Desktop\Stable Diffusion"

convert_stable_diffusion_checkpoint_to_onnx.py: The term 'convert_stable_diffusion_checkpoint_to_onnx.py' is not recognized as a name of a cmdlet, function, script file, or executable program.

Check the spelling of the name, or if a path was included, verify that the path is correct and try again.

Marz

Fri, Sep 16, 2022, 18:20:27

Neil

Fri, Sep 16, 2022, 18:26:06

I've played with trying to use the other schedulers, but haven't had any success yet. They usually die somewhere in the middle with arcane errors I don't know enough to debug.

-Marz-

It looks like the 'convert_stable_diffusion_checkpoint_to_onnx.py' script isn't in the 'E:\Desktop\Stable Diffusion' folder, judging by that error message. Try moving it into there, then running the command again?

Eric

Fri, Sep 16, 2022, 18:31:14

For that matter, is there a complete list of arguments I can put into "pipe()" somewhere?

Neil

Fri, Sep 16, 2022, 18:36:18

The closest thing I've found to a comprehensive list of arguments taken by pipe() is the source code itself: https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/stable_diffusion/pipeline_stable_diffusion_onnx.py#L45

As for using a different scheduler, 'scheduler' is an arg that can be passed to .from_pretrained(), and the diffusers repo has a few examples here: https://github.com/huggingface/diffusers/tree/main/src/diffusers/pipelines/stable_diffusion though I haven't had any luck with those, or tweaks thereof.
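To see which arguments your installed version of `pipe()` actually accepts, Python's `inspect` module can read them straight off the method. Here's a sketch with a hypothetical `list_arguments` helper. Calling `pipe(...)` invokes `pipe.__call__`, so that's the method to inspect.

```python
import inspect


def list_arguments(func):
    """Return each parameter of `func` as text, e.g. 'height=512' or '**kwargs'."""
    return [str(param) for param in inspect.signature(func).parameters.values()]
```

For example: `for arg in list_arguments(pipe.__call__): print(arg)`.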

Tony Topper

Fri, Sep 16, 2022, 23:03:25

Also, both DALL-E 2 and Nitecafe offer generating multiple images at the same time. I would love to get this running with that feature.

I installed the nightly via pip install using the info found here: https://aiinfra.visualstudio.com/PublicPackages/_artifacts/feed/ORT-Nightly/connect/pip Though I had to remove that config line from my pip.ini file after installing it or no other pip installs would work.

Also, FWIW, I got an error about "memory pattern" being disabled when I run the Python script you supplied.

Would love to keep up to date with how you improve the Python script.

(P.S. I've also been getting black squares as the output on occasion. Wonder what that is.)

Marz

Fri, Sep 16, 2022, 23:24:05

It's most definitely there, that's why I'm stumped. Just gonna save my GPU the trouble and use the browser. Thank you though and take care!

Luxion

Sat, Sep 17, 2022, 02:43:25

You forgot to mention that you need to activate the environment before executing the script each time the console is closed - it's obvious, but maybe not for noobs.

Now we just need the guys at diffusers to work on onnx img2img and inpainting pipelines.

Eric

Sat, Sep 17, 2022, 02:46:32

The black squares output is because of the NSFW filter. From another comment above:

"

pipe.safety_checker = lambda images, **kwargs: (images, [False] * len(images))

That will replace pipe's safety checker with a dummy function that always returns "false" when it checks to see if the generated output would be considered NSFW.

"

I'm also getting that memory pattern error, but it doesn't seem to affect anything?

-Neil-

I'm working on the scheduler problem. As near as I can figure, it's something related to an incompatibility with numpy and torch. Torch isn't getting the right type of float from numpy and when I try to cast it, it still doesn't work. That's where I'm investigating lately at least.

ponut64

Sat, Sep 17, 2022, 05:00:32

From here:

https://huggingface.co/hakurei/waifu-diffusion/discussions/4

"it can be fixed by setting dtype=np.int64 in pipeline_stable_diffusion_onnx.py line 133:

noise_pred = self.unet(

sample=latent_model_input, timestep=np.array([t], dtype=np.int64), encoder_hidden_states=text_embeddings

)

"

And specifically about the scheduler:

"I see astype(np.int64) in scheduling_pndm.py line 168 but not in other schedulers that's why change to PNDMScheduler can fix it."

So that guy knows what he's talking about, I don't.

Simon

Sat, Sep 17, 2022, 15:20:07

Gianluca

Sat, Sep 17, 2022, 19:13:24

I was able to use just the CPU so far and now I can finally try with the GPU, instead.

It seems that the GPU is faster in my case.

I have the following system configuration:

- OS: Windows 10 Pro

- MB: ASRock X570 Phantom Gaming 4

- CPU: AMD Ryzen 7 3700X 8-Core 3600MHz

- RAM: 32 GB (17 GB available)

- GPU: MSI AMD Radeon RX 570 ARMOR 8 GB

CPU average time for 512 x 512 was about 5~6 minutes.

With GPU and the simple script above it was reduced to 4 minutes (just 1 run).

And with your more optimized script here above my first run says 2 minutes and 10 seconds.

With CPU it was using about 16GB of the available RAM and CPU usage was about 50%.

With GPU it is using all of the 8GB available and 100% of GPU processing power.

Dan

Sat, Sep 17, 2022, 20:24:27

Had a bit of difficulty putting my Token on the command line. Not sure why Ctrl-V wouldn't work, but a single right click and enter worked.

Luxion

Sat, Sep 17, 2022, 23:23:28

His second script is not 'more optimized', it simply makes some variables which are then used internally to adjust the settings. The reason you are generating twice as fast is because in his second script he set the number of steps to 25 - when not specified, it defaults to 50.

I'm assuming you don't know what steps are nor their importance because you didn't worry about them at all before running the script. In which case I recommend you read/watch some SD tutorials and learn more.

Gianluca

Sun, Sep 18, 2022, 08:39:04

Of course you are right, I noticed the difference in steps just after I posted my comment here (but I could not edit), and I was a little bit sad to acknowledge that my graphic card is not so good at the end :)

Basically for me it works as in your own experience: just slightly better than with CPU alone, perhaps just 0.3~0.5 s/it faster.

ekkko

Sun, Sep 18, 2022, 15:50:36

Luxion

Sun, Sep 18, 2022, 16:02:53

I still see some torch calls in there - not sure it really works but if it does I hope you could update this guide and teach us how to use them when they get merged.

Thanks again for the guide! It's brilliant!

@Gianluca

I have the MSI RX560 4G so I know how you feel.

But there's lots of good news:

- GPU pricing should drop a little in the near future

- ONNX is still improving and its SD models and pipelines will become much better in the very near future

- AMD is apparently working with StabilityAI to help compatibility issues

- And finally within 2 years SD will become so optimized that it will be able to run on mobile - according to Emad - SD's creator!

ekkko

Sun, Sep 18, 2022, 16:02:55

Dan

Sun, Sep 18, 2022, 20:44:18

ponut64

Mon, Sep 19, 2022, 00:03:10

Yes, you can easily do that with a Python "while" loop (which, here, is being used like a 'for' loop would in other languages).

Here is an example (note the TABS!):

num_images = 0

while (num_images < 10):
    num_images = num_images + 1
    image = pipe(prompt, height=448, width=320, guidance_scale=12, num_inference_steps=60).images[0]
    image.save("output" + str(num_images) + ".png")

The count of images you want out of the program is the number that "num_images" is to be less than.
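The same idea can be written with a `for` loop over `range()`, which makes the number of images explicit (set `count=5` for five images). This is a sketch. `generate_numbered` is a hypothetical helper that takes the `pipe` object created in the post's scripts, and the filenames follow the `output1.png`, `output2.png` pattern above.

```python
def generate_numbered(pipe, prompt, count, **pipe_kwargs):
    """Call the pipeline `count` times, saving output1.png, output2.png, and so on."""
    filenames = []
    for i in range(1, count + 1):  # runs exactly `count` times: i = 1 .. count
        image = pipe(prompt, **pipe_kwargs).images[0]
        filename = f"output{i}.png"
        image.save(filename)
        filenames.append(filename)
    return filenames
```

For example: `generate_numbered(pipe, prompt, 5, height=448, width=320, guidance_scale=12, num_inference_steps=60)`.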

Robin

Mon, Sep 19, 2022, 11:14:28

Mads

Mon, Sep 19, 2022, 16:12:59

A question. How do I convert a custom ckpt model instead of the one provided by Hugging Face?

Bellic

Mon, Sep 19, 2022, 18:29:54

Again. Thank you so much.

Allan

Tue, Sep 20, 2022, 00:20:49

Magnus

Tue, Sep 20, 2022, 09:09:16

Can you do reinforcement learning on windows 10 too? referring to the pony art, was that model trained using this setup or downloaded from elsewhere? i'm guessing the latter...

I also notice the generation uses all of my vram (8GB) does that mean it has to use some of the regular ram and slowing it down as a process?

i will try to generate in smaller resolution but are there other ways of reducing vram usage?

Thanks

anonymous

Tue, Sep 20, 2022, 09:46:29

...virtualenv\lib\site-packages\onnxruntime\capi\onnxruntime_inference_collection.py:54: UserWarning: Specified provider 'DmlExecutionProvider' is not in available provider names.Available providers: 'CPUExecutionProvider'

If anybody knows what this issue is, how to resolve it, or if you can tell me how I can go about debugging this, then I would be very grateful. I've been trying to print a list of available providers as my debug starting point to no avail.

michael

Tue, Sep 20, 2022, 10:44:00

please explain in more detail the convert python part.

hugh

Tue, Sep 20, 2022, 10:44:04

Got any ideas on iterating the seed in your loop? Omitting latents=latents from pipe yields irreproducible results.

I tried this:

seed = 50033

while (num_images < 25):
    num_images = num_images + 1
    seed = seed + 1
    image = pipe(prompt, height=512, width=512, guidance_scale=13, num_inference_steps=25).images[0]
    image.save("output" + str(num_images) + "_seed-" + str(seed) + ".png")

and received 25 different images, but running again without changes gives 25 new images.

Replacing with:

image = pipe(prompt, num_inference_steps=13, guidance_scale=13, latents=latents).images[0]

gives reproducible results, but the seed is unchanging throughout.

michael

Tue, Sep 20, 2022, 10:57:45

Neil

Tue, Sep 20, 2022, 11:40:04

Don't have a good out-of-the-box answer for you, but I know a few people have had some success using Gradio to throw together a rudimentary UI. Might be something you could experiment with.

-Mads-

Not sure! I imagine that the convert_stable_diffusion_checkpoint_to_onnx.py script has some clues. I haven't taken a close look at it myself, but you might be able to repurpose whatever it does to point at a local CKPT.

-Magnus-

Not sure! I'm more a casual user than an ML guru. The pony was generated using someone else's custom-built model based on Stable Diffusion that they haven't released yet, not generated locally.

As to reducing VRAM usage, not as far as I know--I think SD uses everything that's available. There's probably some way to tune it, but I don't know what that might be.

-anonymous-

That indicates that SD can't find everything it needs to run DirectML, so it's falling back to executing on the CPU, and you're not getting any advantage from running on your GPU.

When you installed the nightly Onnx Runtime package, did you make sure to pass it the --force-reinstall flag? I noticed I had similar failures until I did so.

Neil

Tue, Sep 20, 2022, 11:49:50

"...yields irreproducible results..."

Of course, you're not actually using the seed in your example! The latents are the source of randomness in the pipeline, and if you don't pass in your own, you give the pipeline free rein to generate them for you, which it will do randomly. If you want deterministic results, you need to generate your own latents, using a seed you control.
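Putting that together, one way to iterate over seeds while keeping each result reproducible is to build fresh latents for every seed. This is a sketch, assuming a `make_latents(seed, width, height)` function such as `get_latents_from_seed()` from the post. `seeded_runs` is a hypothetical helper. Putting the seed in the filename means you can regenerate any image later.

```python
def seeded_runs(pipe, prompt, seeds, make_latents, width=512, height=512, **pipe_kwargs):
    """Generate one image per seed, saving each with its seed in the filename.

    make_latents(seed, width, height) should return the latent noise for that
    seed, e.g. get_latents_from_seed() from the post, so each seed maps to
    fixed noise and therefore a reproducible image.
    """
    saved = []
    for seed in seeds:
        latents = make_latents(seed, width, height)
        image = pipe(prompt, height=height, width=width, latents=latents,
                     **pipe_kwargs).images[0]
        filename = f"output_seed-{seed}.png"
        image.save(filename)
        saved.append(filename)
    return saved
```

For example: `seeded_runs(pipe, prompt, range(50033, 50058), get_latents_from_seed, num_inference_steps=25, guidance_scale=13)` produces 25 images, and rerunning it produces the same 25.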

matasoy

Tue, Sep 20, 2022, 13:45:09

michael

Tue, Sep 20, 2022, 14:16:38

please.

Tue, Sep 20, 2022, 14:38:22

+ ~~~~~~~~~~~~~~~~~~~~

+ CategoryInfo : NotSpecified: ( File "C:\jissis\text2img.py", line 8:String) [], RemoteException

+ FullyQualifiedErrorId : NativeCommandError

num_images = num_images + 1

^

IndentationError: expected an indented block after 'while' statement on line 7

I want to roll more images. I don't know what to change anymore.

please, btw

Tue, Sep 20, 2022, 14:40:16

while (num_images < 10):

num_images = num_images + 1

image = pipe(prompt, height=448, width=320, guidance_scale=12, num_inference_steps=60).images[0]

image.save("output" + str(num_images) + ".png")

what here needs to be changed so it generates FIVE images? ._.

Luxion

Tue, Sep 20, 2022, 15:29:18

>what here needs to be changed so it generates FIVE images? ._.

I would advise you to try to understand the code before anything else. It's fairly easy even if you know nothing of Python or coding in general.

Take a look: you first create a variable named "num_images" and set it to the number 0.

Then you create a loop that will repeat all of the code inside of it for as long as its condition is True, and the condition is "num_images < 10". Right at the start of the loop, you add 1 to "num_images", and you do so on every loop.

Since "num_images" increases by 1 every time it loops and the loop itself will terminate at the end of the coding sequence when it reaches 10, guess how many times it will repeat itself and also guess what do you need to change in order to loop for a specific number of times of your choice...

Be sure to add an extra tab on each line of code inside the loop, otherwise Python won't recognize that code as being inside of the loop. If necessary, check online for examples of 'while loops in python'.

Josh

Thu, Sep 22, 2022, 02:18:53

raise ValueError(f"Unexpected latents shape, got {latents.shape}, expected {latents_shape}")

ValueError: Unexpected latents shape, got (1, 4, 128, 128), expected (1, 4, 64, 64) "

Neil

Thu, Sep 22, 2022, 09:25:51

The current state of the ONNX pipeline doesn't support sizes other than 512x512.
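Reading the two shapes in Josh's error: assuming the usual Stable Diffusion v1 layout, where latents have 4 channels at 1/8 of the image resolution, the latents that were passed in correspond to a 1024x1024 image, while the pipeline expected latents for its 512x512 size. A small sketch of that arithmetic (the helper name is hypothetical):

```python
VAE_SCALE = 8  # assumed SD v1 VAE factor: each latent cell covers 8x8 pixels


def image_size_for_latents(shape):
    """Map a (batch, channels, height, width) latents shape to an image size in pixels."""
    _, _, latent_h, latent_w = shape
    return latent_h * VAE_SCALE, latent_w * VAE_SCALE


print(image_size_for_latents((1, 4, 128, 128)))  # (1024, 1024): the latents that were passed in
print(image_size_for_latents((1, 4, 64, 64)))    # (512, 512): what the pipeline expected
```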

Someone

Fri, Sep 23, 2022, 05:30:04

Thanks for this wonderful tutorial. I was able to make it work, but it is not using the GPU atm.

I am getting the following warning when running text2img.py:

C:\stbdiff\virtualenv\lib\site-packages\onnxruntime\capi\onnxruntime_inference_collection.py:54: UserWarning: Specified provider 'DmlExecutionProvider' is not in available provider names.Available providers: 'CPUExecutionProvider'

It seems GPU execution is not available. Any idea how to fix this one?
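The warning says that the onnxruntime build Python is importing only offers 'CPUExecutionProvider', so the pipeline falls back to the CPU. One way to confirm which build is active inside the virtualenv is `onnxruntime.get_available_providers()`; if 'DmlExecutionProvider' is missing from that list, the DirectML build is presumably not the one installed there (an assumption, not a diagnosis). A sketch; `pick_provider` and `available_providers` are hypothetical helper names:

```python
def pick_provider(available, preferred="DmlExecutionProvider"):
    """Use the DirectML provider if onnxruntime reports it, otherwise fall back to CPU."""
    return preferred if preferred in available else "CPUExecutionProvider"


def available_providers():
    """Ask the installed onnxruntime which providers it offers (None if it isn't installed)."""
    try:
        import onnxruntime as ort
    except ImportError:
        return None
    return ort.get_available_providers()


providers = available_providers()
print("Available providers:", providers)
if providers is not None:
    print("Would use:", pick_provider(providers))
```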

Thanks again.

Gato Guff

Sat, Sep 24, 2022, 18:59:33

Leon

Sun, Sep 25, 2022, 01:16:01

I don't know what I did wrong, to be honest.

Chris

Sun, Sep 25, 2022, 10:51:24

(virtualenv) (base) PS F:\amd-diffuser> python convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="./stable_diffusion_onnx"

Traceback (most recent call last):

File "F:\amd-diffuser\convert_stable_diffusion_checkpoint_to_onnx.py", line 23, in <module>

import onnx

ModuleNotFoundError: No module named 'onnx'

I also amended the command to specify the full output folder, and it also fails:

(virtualenv) (base) PS F:\amd-diffuser> py convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="F:\amd-diffuser\stable_diffusion_onnx"

Traceback (most recent call last):

File "F:\amd-diffuser\convert_stable_diffusion_checkpoint_to_onnx.py", line 23, in <module>

import onnx

ModuleNotFoundError: No module named 'onnx'

Can anyone tell me what this error means and how to fix it?

Chris

Sun, Sep 25, 2022, 11:20:52

(virtualenv) (base) PS F:\amd-diffuser> py convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="./stable_diffusion_onnx"

Traceback (most recent call last):

File "F:\amd-diffuser\convert_stable_diffusion_checkpoint_to_onnx.py", line 20, in <module>

import torch

ModuleNotFoundError: No module named 'torch'
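ModuleNotFoundError means the Python interpreter running the script can't see that package, either because it was never installed into the virtualenv or because a different Python is running the script (1312's prompt further down, for example, shows D:\miniconda3\python.exe running even though (virtualenv) is shown). A sketch that checks several modules at once and prints which interpreter is in use; the module list is assumed from the errors in this thread and is not necessarily complete. Anything reported missing can then be installed with pip from inside the activated virtualenv, for example `pip install onnx`.

```python
import importlib.util
import sys

# Modules the convert script failed on in this thread (assumed list, not exhaustive).
REQUIRED = ["torch", "onnx", "onnxruntime", "diffusers"]


def missing_modules(names=REQUIRED):
    """Return the top-level modules that the *current* Python cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


print("Python in use:", sys.executable)  # should be inside your virtualenv folder
print("Missing:", missing_modules() or "nothing")
```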

Chris

Sun, Sep 25, 2022, 13:13:16

Thanks for creating the guide! It took me a while to get it working, but I am now able to create images with my 7-year-old AMD R9 390 8GB card in around 5 minutes.

quickwick

Sun, Sep 25, 2022, 21:39:03

I've been playing with this for the past few days. I ended up hacking together a basic Tkinter-based GUI to make experimentation faster/easier. Hopefully other people find it useful. https://github.com/quickwick/stable-diffusion-win-amd-ui

Luxion

Sun, Sep 25, 2022, 21:52:44

Just something to keep in mind: it shouldn't take long for diffusers to add img2img and inpaint ONNX support. The PR is almost ready to be merged; it just needs some touches and verification. I tried it and it works.

Somewhat unrelated to the UI: one thing I'm still trying to figure out is how to convert and load custom fine-tuned models such as WaifuDiffusion with ONNX, as well as how to load textual inversion embeddings. I'm not sure it's even possible atm. If someone knows something about this, please share some insights.

frosty

Mon, Sep 26, 2022, 16:27:27

python convert_stable_diffusion_checkpoint_to_onnx.py --model_path="hakurei/waifu-diffusion" --output_path="./stable_diffusion_onnx"

However, this didn't work out of the box; I also had to make some slight tweaks inside the diffusers package to get it to run.

\virtualenv\Lib\site-packages\diffusers\schedulers\scheduling_ddim.py, line 210:

pred_original_sample = (torch.FloatTensor(sample) - beta_prod_t ** (0.5) * torch.FloatTensor(model_output)) / alpha_prod_t ** (0.5)

virtualenv\Lib\site-packages\diffusers\pipelines\stable_diffusion\pipeline_stable_diffusion_onnx.py line 133:

sample=latent_model_input, timestep=np.array([t], dtype=np.int64), encoder_hidden_states=text_embeddings

Obviously it's a very hacky approach: modifying the packages means I'm screwed if I want to update, but it works :-)
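For context, the line frosty patched computes the DDIM scheduler's estimate of the denoised sample, x0 = (x_t - sqrt(1 - alpha_bar_t) * eps) / sqrt(alpha_bar_t), where `beta_prod_t = 1 - alpha_prod_t`. The `torch.FloatTensor(...)` wrappers appear to be there only to turn NumPy arrays coming from the ONNX pipeline into tensors (an inference from the patch, not something confirmed upstream). The same arithmetic as a hypothetical standalone helper, which works on plain floats and element-wise on NumPy arrays:

```python
def predict_original_sample(sample, model_output, alpha_prod_t):
    """DDIM estimate of the clean sample x0 from a noisy sample x_t and predicted noise eps.

    x0 = (x_t - sqrt(1 - alpha_bar_t) * eps) / sqrt(alpha_bar_t)
    """
    beta_prod_t = 1.0 - alpha_prod_t
    return (sample - beta_prod_t ** 0.5 * model_output) / alpha_prod_t ** 0.5
```

Because it only uses `-`, `*`, `/` and `**`, no conversion between NumPy and torch is needed for this particular step.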

Luxion

Mon, Sep 26, 2022, 18:48:37

Thanks!

Yesterday I too managed to convert it (the output I used was --output_path="./waifu_diffusion_onnx"), but when trying it out it gave me weird results. The first odd thing was that when I tried to run 10 steps (or fewer), it would run 14 instead. And though I haven't tried txt2img in depth, img2img (which I got from one of the diffusers PRs and which works fine with the default SD model) was totally off: the more steps I added, the noisier the image got. I found some info here (https://huggingface.co/hakurei/waifu-diffusion/discussions/4) saying that changing the scheduler in a JSON file would make it work, but it didn't. I then noticed that the scheduler is also used in other files, so maybe it will work if I change it in all of them; I still need to experiment with that. Hopefully I can get it to work without changing any package, and if not, then so be it. It doesn't bother me, but it isn't a good approach for the average user.

Thank you again for your help.

Mark

Tue, Sep 27, 2022, 22:37:05

Could you expand on this a bit? Which PR are you referring to? I'd like to give it a go myself.

Luxion

Tue, Sep 27, 2022, 23:08:06

Check the "files changed" tab: it adds 2 new .py files, which are the img2img and inpaint pipelines, and changes other files as well to make them work. Basically, you just need to go to ...\virtualenv\Lib\site-packages\diffusers\pipelines\stable_diffusion\, create those 2 files there, then manually apply the respective changes to the other files.

Now, because the pipelines are not finished yet, you have to do the following 2 steps:

Go to https://huggingface.co/CompVis/stable-diffusion-v1-4/tree/main and download the 'vae' folder and put it inside the "stable_diffusion_onnx" folder.

Then still inside "stable_diffusion_onnx", open up "model_index.json" and add:

"vae": [
    "diffusers",
    "AutoencoderKL"
]

Don't forget to add a comma after the "]" that closes the entry before "vae", so the file should end like this:

"vae_decoder": [
    "diffusers",
    "OnnxRuntimeModel"
],
"vae": [
    "diffusers",
    "AutoencoderKL"
]
}

Basically, the pipelines will use the default SD VAE (the PyTorch one) instead of an ONNX version until someone gets that working.
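Instead of editing model_index.json by hand, the same entry can be added with Python's json module, which takes care of the commas. A sketch, assuming the folder layout above; `add_vae_entry` is a hypothetical helper, and it only replaces the JSON-editing step, not the download of the vae folder.

```python
import json
from pathlib import Path


def add_vae_entry(model_dir="./stable_diffusion_onnx"):
    """Add the "vae": ["diffusers", "AutoencoderKL"] entry to model_index.json.

    Letting json write the file avoids hand-editing mistakes such as a missing comma.
    """
    index_path = Path(model_dir) / "model_index.json"
    index = json.loads(index_path.read_text(encoding="utf-8"))
    index["vae"] = ["diffusers", "AutoencoderKL"]  # a new key goes after the existing ones
    index_path.write_text(json.dumps(index, indent=2), encoding="utf-8")
    return index
```

Running it twice is harmless: the second call just overwrites "vae" with the same value.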

Finally, make a script to run them, similar to the txt2img one but using the arguments the new pipelines require (I've only tested img2img so far). This is the simplest img2img.py script:

from diffusers import StableDiffusionImg2ImgOnnxPipeline

from PIL import Image

baseImage = Image.open(r"cube.jpg").convert("RGB") # opens cube.jpg from the current working directory (where you run the script from) and converts it to RGB

baseImage = baseImage.resize((512,512))

prompt = "an orange cube made of oranges"

denoiseStrength = 0.8 # a float number from 0 to 1 - decreasing this number will increase result similarity with baseImage

steps = 20

scale = 7.5

pipe = StableDiffusionImg2ImgOnnxPipeline.from_pretrained("./stable_diffusion_onnx", provider="DmlExecutionProvider")

image = pipe(prompt, init_image=baseImage, strength=denoiseStrength, num_inference_steps=steps, guidance_scale=scale).images[0]

image.save("output.png")

--------------------

@frosty

I managed to get Waifu Diffusion to work with 2 minor changes in 2 "waifu-diffusion" files before converting, but the results with the default scheduler were absolutely horrible. I ended up doing as you said, and it seems to be working just fine. I only had to modify 1 line in the img2img pipeline to make it work with DDIM, and that was it.

Thank you again! At this very moment I'm trying to figure out how to load textual inversion embeddings; if by any chance you (or anybody else) know how, please tell me!

Lino

Thu, Sep 29, 2022, 20:10:56

fuzz

Fri, Sep 30, 2022, 01:12:18

usage: convert_stable_diffusion_checkpoint_to_onnx.py [-h] --model_path MODEL_PATH --output_path OUTPUT_PATH

[--opset OPSET]

convert_stable_diffusion_checkpoint_to_onnx.py: error: the following arguments are required: --output_path

No matter what I put for the output path, I get this error. What am I doing wrong?
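argparse raises this error whenever --output_path never reaches the script as its own argument, whatever value you give it: for example, possibly when the command gets split across two lines, or when the flag is misspelled (such as --output-path with a hyphen). A sketch that mimics the two required flags shows the difference; it is a minimal reconstruction from the usage message above, not the actual convert script, and `try_parse` is a hypothetical helper.

```python
import argparse

# Minimal reconstruction of the flags in the usage message above (not the real script).
parser = argparse.ArgumentParser(prog="convert_stable_diffusion_checkpoint_to_onnx.py")
parser.add_argument("--model_path", required=True)
parser.add_argument("--output_path", required=True)
parser.add_argument("--opset", type=int)


def try_parse(argv):
    """Return the parsed arguments, or None where the real script would exit with the error."""
    try:
        return parser.parse_args(argv)
    except SystemExit:  # argparse prints the usage/error text, then exits
        return None
```

`try_parse(["--model_path=CompVis/stable-diffusion-v1-4", "--output_path=./stable_diffusion_onnx"])` succeeds, while leaving out the second argument reproduces "the following arguments are required: --output_path".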

frosty

Fri, Sep 30, 2022, 11:19:23

The thing I'm struggling with now is whether (and how) I can get it to work with bigger or different image sizes; anything other than 512x512 either gives me an error or garbled images.

FirstTimePython

Fri, Sep 30, 2022, 12:00:57

Marc-André

Fri, Sep 30, 2022, 17:54:17

S

Fri, Sep 30, 2022, 19:21:43

Token:

Traceback (most recent call last):

File "C:\Python310\lib\runpy.py", line 196, in _run_module_as_main

return _run_code(code, main_globals, None,

File "C:\Python310\lib\runpy.py", line 86, in _run_code

exec(code, run_globals)

File "C:\Python310\Scripts\huggingface-cli.exe\__main__.py", line 7, in <module>

File "C:\Python310\lib\site-packages\huggingface_hub\commands\huggingface_cli.py", line 45, in main

service.run()

File "C:\Python310\lib\site-packages\huggingface_hub\commands\user.py", line 149, in run

_login(self._api, token=token)

File "C:\Python310\lib\site-packages\huggingface_hub\commands\user.py", line 319, in _login

raise ValueError("Invalid token passed!")

ValueError: Invalid token passed!

Mark

Fri, Sep 30, 2022, 20:04:30

Ctrl-V won't paste from your clipboard; right-click the empty space after the "Token: " prompt to paste (nothing will visibly change, but it will paste, I promise). Then press Enter.

Alternatively, modify 'user.py':

...\lib\site-packages\huggingface_hub\commands\user.py

Open the script and look for:

token=getpass("Token:")

_login(self._api, token=token)

Change it to:

token='yourtokenhere'#getpass("Token:")

_login(self._api, token=token)

Save the file, run the 'huggingface-cli login' command again.

FluffRat

Sat, Oct 01, 2022, 13:52:23

Pula

Sat, Oct 01, 2022, 19:17:08

The second was that you need to 'pip install onnx', or you'll run into 'module not found' when executing 'convert_stable_diffusion_checkpoint_to_onnx.py'. I'm still working on trying to install the files; my interwebs connection apparently sucks today.

MSC

Sat, Oct 01, 2022, 21:39:33

I attempted the ort_nightly install step and got this:

(virtualenv) PS C:\stable-diffusion> pip install ort_nightly_directml-1.13.0.dev20220908001-cp310-cp310-win_amd64 --force-reinstall

>>

ERROR: Could not find a version that satisfies the requirement ort_nightly_directml-1.13.0.dev20220908001-cp310-cp310-win_amd64 (from versions: none)

ERROR: No matching distribution found for ort_nightly_directml-1.13.0.dev20220908001-cp310-cp310-win_amd64

FluffRat

Sun, Oct 02, 2022, 14:26:31

Lapo

Sun, Oct 02, 2022, 14:43:58

(virtualenv) PS D:\stable-diffusion\stable_diffusion_onnx> python .\text2img.py

Traceback (most recent call last):

File "D:\stable-diffusion\stable_diffusion_onnx\text2img.py", line 12, in <module>

pipe = StableDiffusionOnnxPipeline.from_pretrained("./stable_diffusion_onnx", provider="DmlExecutionProvider")

File "D:\stable-diffusion\virtualenv\lib\site-packages\diffusers\pipeline_utils.py", line 288, in from_pretrained

cached_folder = snapshot_download(

File "D:\stable-diffusion\virtualenv\lib\site-packages\huggingface_hub\utils\_deprecation.py", line 98, in inner_f

return f(*args, **kwargs)

File "D:\stable-diffusion\virtualenv\lib\site-packages\huggingface_hub\utils\_validators.py", line 92, in _inner_fn

validate_repo_id(arg_value)

File "D:\stable-diffusion\virtualenv\lib\site-packages\huggingface_hub\utils\_validators.py", line 142, in validate_repo_id

raise HFValidationError(

huggingface_hub.utils._validators.HFValidationError: Repo id must use alphanumeric chars or '-', '_', '.', '--' and '..' are forbidden, '-' and '.' cannot start or end the name, max length is 96: './stable_diffusion_onnx'.

Does anyone know what this might be caused by?
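In Lapo's prompt the script is run from inside D:\stable-diffusion\stable_diffusion_onnx, so the relative path ./stable_diffusion_onnx probably points at a folder that doesn't exist. The traceback shows what happens next: when the path isn't a local folder, from_pretrained hands it to snapshot_download as a Hugging Face repo id, which then fails validation. Running the script from D:\stable-diffusion should avoid that; alternatively, a hypothetical helper like the one below can check for the folder first and give a clearer error.

```python
import os
from pathlib import Path


def find_model_dir(name, search_dirs):
    """Return the first existing folder called `name` inside any of `search_dirs`."""
    for base in search_dirs:
        candidate = Path(base) / name
        if candidate.is_dir():
            return candidate.resolve()
    raise FileNotFoundError(
        f"No folder named {name!r} in {[str(d) for d in search_dirs]} (current folder: {os.getcwd()})"
    )


# Hypothetical usage in text2img.py: look next to the script and in the current folder.
# model_dir = find_model_dir("stable_diffusion_onnx", [Path(__file__).resolve().parent, Path.cwd()])
# pipe = StableDiffusionOnnxPipeline.from_pretrained(str(model_dir), provider="DmlExecutionProvider")
```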

SovietYeet

Sun, Oct 02, 2022, 19:15:33

Frank

Sun, Oct 02, 2022, 20:08:21

python convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="C:\Users\KieraPC\Desktop\stable-diffusion\stable_diffusion_onnx"

and the actual address of the virtual environment is located in 'C:\Users\KieraBooper\Desktop\stable-diffusion'. I also have a text document 'convert_stable_diffusion_checkpoint_to_onnx.py' with the contents of that file on githubusercontent in both the 'stable-diffusion' folder and in the virtual environment. What am I doing wrong? Please treat me like a peasant and spell it out for me

Frank

Sun, Oct 02, 2022, 20:12:11

Frank

Sun, Oct 02, 2022, 20:26:47

'Login successful

Your token has been saved to C:\Users\KieraPC\.huggingface\token'

This is so hard, it's mind-blowing.

Shamu

Sun, Oct 02, 2022, 21:23:36

A. Dumas

Sun, Oct 02, 2022, 21:39:22

FluffRat

Mon, Oct 03, 2022, 00:28:37

python convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="./stable_diffusion_onnx"

For reference, I ran that from PowerShell, not cmd. I'm not sure whether the slash needs to be flipped for the cmd shell.

Devon

Mon, Oct 03, 2022, 09:30:51

I just wanted to ask real quick: after running the script to grab Stable Diffusion, should the prompt window be throwing out endless warnings about shape inference and constant folding steps?

Cheers!

Cloudy

Mon, Oct 03, 2022, 11:54:51

I've altered your script to loop over 200 seed numbers (50,000 to 50,199) for a successful prompt; here are my favorite results:

https://imgur.com/a/S02vUMy/layout/grid

Mike

Mon, Oct 03, 2022, 15:49:38

Luxion

Mon, Oct 03, 2022, 23:21:24

I'm short on time, so I will summarize.

You need to find the 'convert_original_stable_diffusion_to_diffusers.py' script on the diffusers GitHub, download it, and place it in the same location as the other convert script. Place the checkpoint there as well, then run the script like this:

python convert_original_stable_diffusion_to_diffusers.py --checkpoint_path="./pokemon-ema-only-epoch-000142.ckpt" --dump_path="./pokemon-ema-only-epoch-000142"

This converts the checkpoint to the diffusers format, but you then need to convert that to ONNX.

Run convert_stable_diffusion_checkpoint_to_onnx.py like so:

python convert_stable_diffusion_checkpoint_to_onnx.py --model_path="./pokemon-ema-only-epoch-000142" --output_path="./pokemon-ema-only-epoch-000142_onnx"

You can now delete the diffusers generated folder and just keep the onnx one.
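The two conversion steps can be chained from one small script. A sketch, assuming both convert scripts sit in the current folder next to the .ckpt and using the same naming as the commands above; `conversion_commands` and `convert` are hypothetical helpers. Using `sys.executable` runs both scripts with the same Python (ideally the virtualenv's) that runs this file.

```python
import subprocess
import sys
from pathlib import Path


def conversion_commands(checkpoint):
    """Build the .ckpt -> diffusers -> ONNX commands shown above for one checkpoint file."""
    ckpt = Path(checkpoint)
    diffusers_dir = f"./{ckpt.stem}"
    onnx_dir = f"./{ckpt.stem}_onnx"
    return [
        [sys.executable, "convert_original_stable_diffusion_to_diffusers.py",
         f"--checkpoint_path=./{ckpt.name}", f"--dump_path={diffusers_dir}"],
        [sys.executable, "convert_stable_diffusion_checkpoint_to_onnx.py",
         f"--model_path={diffusers_dir}", f"--output_path={onnx_dir}"],
    ]


def convert(checkpoint):
    """Run both steps in order; check=True stops at the first step that fails."""
    for command in conversion_commands(checkpoint):
        subprocess.run(command, check=True)
```

For example, `convert("pokemon-ema-only-epoch-000142.ckpt")` would leave a pokemon-ema-only-epoch-000142_onnx folder, after which the intermediate diffusers folder can be deleted as described above.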

You will probably need to use the DDIM scheduler with that model, so look up part 2 of this tutorial, which explains how to do so.

Here is how to use it in txt2img script:

...

modelName = "pokemon-ema-only-epoch-000142_onnx"

ddim = DDIMScheduler(beta_start=0.00085, beta_end=0.012, beta_schedule="scaled_linear", num_train_timesteps=1000, clip_sample=False, set_alpha_to_one=False, tensor_format="np")

pipe = StableDiffusionOnnxPipeline.from_pretrained("./" + modelName, provider="DmlExecutionProvider", scheduler=ddim)

...

Luxion

Tue, Oct 04, 2022, 09:08:35

One thing I forgot to mention: you might need to make a change to the DDIM scheduler itself, perhaps even before converting the model. If so, look at frosty's comments on this page; he explains how to do it.

FluffRat

Tue, Oct 04, 2022, 11:17:05

ananab

Wed, Oct 05, 2022, 13:55:37

File "C:\Users\user\AppData\Local\Programs\Python\Python310\lib\runpy.py", line 196, in _run_module_as_main

return _run_code(code, main_globals, None,

File "C:\Users\user\AppData\Local\Programs\Python\Python310\lib\runpy.py", line 86, in _run_code

exec(code, run_globals)

File "C:\Programmi\AI\stable_diffusion_amd\amd_venv\Scripts\huggingface-cli.exe\__main__.py", line 7, in <module>

File "C:\Programmi\AI\stable_diffusion_amd\amd_venv\lib\site-packages\huggingface_hub\commands\huggingface_cli.py", line 45, in main

service.run()

File "C:\Programmi\AI\stable_diffusion_amd\amd_venv\lib\site-packages\huggingface_hub\commands\user.py", line 149, in run

_login(self._api, token=token)

File "C:\Programmi\AI\stable_diffusion_amd\amd_venv\lib\site-packages\huggingface_hub\commands\user.py", line 320, in _login

hf_api.set_access_token(token)

File "C:\Programmi\AI\stable_diffusion_amd\amd_venv\lib\site-packages\huggingface_hub\hf_api.py", line 719, in set_access_token

write_to_credential_store(USERNAME_PLACEHOLDER, access_token)

File "C:\Programmi\AI\stable_diffusion_amd\amd_venv\lib\site-packages\huggingface_hub\hf_api.py", line 588, in write_to_credential_store

with subprocess.Popen(

File "C:\Users\user\AppData\Local\Programs\Python\Python310\lib\subprocess.py", line 969, in __init__

self._execute_child(args, executable, preexec_fn, close_fds,

File "C:\Users\user\AppData\Local\Programs\Python\Python310\lib\subprocess.py", line 1438, in _execute_child

hp, ht, pid, tid = _winapi.CreateProcess(executable, args,

FileNotFoundError: [WinError 2] The system cannot find the file specified

I get this error... I reinstalled a non-Store Python and I installed Git too...

I tried editing the user.py file, inserting the line token = 'MY TOKEN'#getpass("Token: "), but it keeps giving me this problem...
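The last frames show huggingface_hub's write_to_credential_store starting a subprocess, and WinError 2 means Windows could not find the program it tried to launch. Given that the guide says the login stores its credentials through Git, a likely (but unconfirmed) cause is that git isn't visible on the PATH of that terminal, even though it is installed. A quick check from the same virtualenv; `git_location` is a hypothetical helper:

```python
import shutil


def git_location():
    """Return the full path of the git executable found on PATH, or None."""
    return shutil.which("git")


location = git_location()
if location is None:
    print("git was not found on PATH; reopen the terminal after installing Git, or add it to PATH.")
else:
    print("git found at", location)
```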

Harish Anand

Fri, Oct 07, 2022, 18:39:02

Edgar

Sat, Oct 08, 2022, 10:36:04

Edgar

Sat, Oct 08, 2022, 10:38:44

pip install huggingface_hub

python -c "from huggingface_hub.hf_api import HfFolder; HfFolder.save_token('MY_HUGGINGFACE_TOKEN_HERE')"

Ben Johnson

Sat, Oct 08, 2022, 13:52:45

I am at this command: pip install ./ort_nightly_directml-1.13.0.dev20220913011-cp37-cp37m-win_amd64

When I run it, however, I get 'invalid requirement'. I've tried downloading different versions of the DirectML build and upgrading pip, but none of that seems to work.

Luxion

Sat, Oct 08, 2022, 14:58:40

That version of Python might work, but it's not the latest. You should probably be using 3.10.x just to be safe.

As for why it fails, it's probably because you are using the wrong command.

'pip install' is usually used to download libraries from the internet, but when you give it a local file, it installs that file instead. What you are specifying is a folder (which probably doesn't exist), not the file; you need to add .whl at the end, like so:

pip install ./ort_nightly_directml-1.13.0.dev20220913011-cp37-cp37m-win_amd64.whl
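The wheel's file name also has to match the Python you're running: the cp37-cp37m part means this build is for CPython 3.7 only, and win_amd64 means 64-bit Windows. (MSC's 'No matching distribution' error above looks like the same missing-.whl problem, with pip treating the bare name as a package to look up online.) A rough sketch that reads those tags from a file name and compares them with the running Python; it is not pip's full compatibility check, and the helper names are hypothetical.

```python
import sys


def wheel_tags(filename):
    """Split a wheel file name into its (python, abi, platform) tags (PEP 427 layout)."""
    if not filename.endswith(".whl"):
        raise ValueError(f"{filename!r} is not a wheel file name (missing .whl)")
    python_tag, abi_tag, platform_tag = filename[: -len(".whl")].split("-")[-3:]
    return python_tag, abi_tag, platform_tag


def built_for_this_python(filename):
    """Rough check: does the wheel's Python tag match the running CPython version?"""
    python_tag, _, _ = wheel_tags(filename)
    wanted = f"cp{sys.version_info.major}{sys.version_info.minor}"
    return wanted in python_tag.split(".")
```

On Python 3.10, for example, `built_for_this_python("ort_nightly_directml-1.13.0.dev20220913011-cp37-cp37m-win_amd64.whl")` returns False, which means you'd want the cp310 build instead.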

Ornithopter

Sat, Oct 08, 2022, 15:58:58

They offered a solution on Linux with AMD GPUs, but I need it on Windows.

So how can I get Windows + AMD GPUs + WebUI?

theGuy

Mon, Oct 10, 2022, 06:14:19

Adrian

Mon, Oct 10, 2022, 13:54:50

While I'm trying to download the ONNX-ified model files, the program stops and raises a timeout error.

Does anyone know how I should fix this? When I test my internet connection, there doesn't seem to be any problem.

requests.exceptions.ConnectionError: HTTPSConnectionPool(host='cdn-lfs.huggingface.co', port=443): Read timed out.
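A read timeout from cdn-lfs.huggingface.co points to a slow or interrupted transfer rather than a broken setup, so simply retrying may be enough. A sketch of a retry wrapper with a growing delay; `with_retries` is a hypothetical helper. It relies on requests' ConnectionError being a subclass of OSError, and it assumes that files which finished downloading on an earlier attempt are reused from the Hugging Face cache, so each retry only needs the rest.

```python
import time


def with_retries(fn, attempts=5, delay=10.0, retry_on=(OSError,)):
    """Call fn(); on a retryable error, wait and try again, doubling the wait each time."""
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except retry_on as err:
            if attempt == attempts:
                raise  # give up and show the original error
            print(f"Attempt {attempt} failed ({err!r}); retrying in {delay:.0f}s")
            time.sleep(delay)
            delay *= 2


# Hypothetical usage: wrap whichever call does the download in your script, e.g.
# pipe = with_retries(lambda: StableDiffusionOnnxPipeline.from_pretrained(...))
```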

BlueJay

Tue, Oct 11, 2022, 01:03:56

Adrian

Tue, Oct 11, 2022, 19:17:47

BlueJay

Tue, Oct 11, 2022, 22:28:17

489

Wed, Oct 12, 2022, 08:50:09

_path="CompVis/stable-diffusion-v1-4" --output_path="./stable_diffusion_onnx"

Traceback (most recent call last):

File "convert_stable_diffusion_checkpoint_to_onnx.py", line 24, in <module>

from diffusers import StableDiffusionOnnxPipeline, StableDiffusionPipeline

File "C:\SD\virtualenv\lib\site-packages\diffusers\__init__.py", line 15, in <module>

from .onnx_utils import OnnxRuntimeModel

File "C:\SD\virtualenv\lib\site-packages\diffusers\onnx_utils.py", line 31, in <module>

import onnxruntime as ort

File "C:\SD\virtualenv\lib\site-packages\onnxruntime\__init__.py", line 55, in <module>

raise import_capi_exception

File "C:\SD\virtualenv\lib\site-packages\onnxruntime\__init__.py", line 23, in <module>

from onnxruntime.capi._pybind_state import (

File "C:\SD\virtualenv\lib\site-packages\onnxruntime\capi\_pybind_state.py", line 33, in <module>

from .onnxruntime_pybind11_state import * # noqa

ImportError: DLL load failed: The specified module could not be found.

I am plagued with errors.

I am unable to retrieve the model; I get the above message.

Can someone please give me some hints?

i axe u a question

Wed, Oct 12, 2022, 13:38:13

LErikson

Wed, Oct 12, 2022, 15:36:52

Nice work! I tried different guides to get it working on my AMD desktop.

I have the feeling the art style is stuck: nothing changes in the art style when I change the 'art by ...' part of the prompt. Does anyone else have that problem (or a solution? ;))

LErikson

Wed, Oct 12, 2022, 15:37:59

Nice work! I tried different guides to get it working on my AMD desktop, until I found your site, which was the solution. Thank you!

1312

Wed, Oct 12, 2022, 18:20:32

(virtualenv) (base) C:\Users\1312>python convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="./stable_diffusion_onnx"

D:\miniconda3\python.exe: can't open file 'C:\Users\1312\convert_stable_diffusion_checkpoint_to_onnx.py': [Errno 2] No such file or directory

1312

Wed, Oct 12, 2022, 18:40:09

Traceback (most recent call last):

File "C:\Users\1312\convert_stable_diffusion_checkpoint_to_onnx.py", line 23, in <module>

import onnx

ModuleNotFoundError: No module named 'onnx'

489

Wed, Oct 12, 2022, 18:42:10

Hi, 1312

Try installing onnx!

1312

Wed, Oct 12, 2022, 18:44:13

Traceback (most recent call last):

File "C:\Users\1312\stable-diffusion\convert_stable_diffusion_checkpoint_to_onnx.py", line 20, in <module>

import torch

ModuleNotFoundError: No module named 'torch'

Sorry, I ain't no coding master and I have no clue what I am doing :(

1312

Wed, Oct 12, 2022, 18:51:05

OSError: There was a specific connection error when trying to load CompVis/stable-diffusion-v1-4:

(Request ID: jJWdiKecAfEcU9TsGlEU5)

Luxion

Wed, Oct 12, 2022, 23:23:26

https://pastebin.com/1mF6zdvc

0Point

Fri, Oct 14, 2022, 05:23:42

Do you have any idea how to replace the VAE in the diffusers model with another vae.pt file (or somehow convert the .pt to a .bin file)?

I've tried a lot of approaches, but they all failed. ):

Luxion

Fri, Oct 14, 2022, 15:01:55

Usually the .ckpt file has the VAE integrated into it, so when you run the "convert_original_stable_diffusion_to_diffusers.py" script (which you can get from the scripts folder in the diffusers GitHub repo), it tries to load the VAE from there and convert it to diffusers; when there is no VAE in it, it returns an error. The way to solve this is to edit the script so you can point it to the external VAE instead.

Here is a pastebin link for my edited script:

https://pastebin.com/skwwbPpw

You can use it by following the instructions at the top or just get some insights from it and edit your own. Don't forget you then need to convert it to ONNX with the convert to ONNX script.

Chris

Fri, Oct 14, 2022, 16:27:07

Here is my text2img.py:

from diffusers import StableDiffusionOnnxPipeline

pipe = StableDiffusionOnnxPipeline.from_pretrained("./stable_diffusion_onnx", provider="DmlExecutionProvider")

pipe.safety_checker = lambda images, **kwargs: (images, [False] * len(images))

prompt = "A happy dog"

image = pipe(prompt).images[0]

image.save("output.png")

When I run python .\text2img.py

I got this:

(virtualenv) PS H:\stable-diffusion> python .\text2img.py

2022-10-14 09:03:13.5408074 [W:onnxruntime:, inference_session.cc:492 onnxruntime::InferenceSession::RegisterExecutionProvider] Having memory pattern enabled is not supported while using the DML Execution Provider. So disabling it for this session since it uses the DML Execution Provider.

2022-10-14 09:03:13.8936722 [W:onnxruntime:, session_state.cc:1030 onnxruntime::VerifyEachNodeIsAssignedToAnEp] Some nodes were not assigned to the preferred execution providers which may or may not have an negative impact on performance. e.g. ORT explicitly assigns shape related ops to CPU to improve perf.

2022-10-14 09:03:13.9006160 [W:onnxruntime:, session_state.cc:1032 onnxruntime::VerifyEachNodeIsAssignedToAnEp] Rerunning with verbose output on a non-minimal build will show node assignments.

2022-10-14 09:03:14.9648983 [W:onnxruntime:, inference_session.cc:492 onnxruntime::InferenceSession::RegisterExecutionProvider] Having memory pattern enabled is not supported while using the DML Execution Provider. So disabling it for this session since it uses the DML Execution Provider.

2022-10-14 09:03:15.0303245 [W:onnxruntime:, session_state.cc:1030 onnxruntime::VerifyEachNodeIsAssignedToAnEp] Some nodes were not assigned to the preferred execution providers which may or may not have an negative impact on performance. e.g. ORT explicitly assigns shape related ops to CPU to improve perf.

2022-10-14 09:03:15.0370503 [W:onnxruntime:, session_state.cc:1032 onnxruntime::VerifyEachNodeIsAssignedToAnEp] Rerunning with verbose output on a non-minimal build will show node assignments.

2022-10-14 09:03:15.5524993 [W:onnxruntime:, inference_session.cc:492 onnxruntime::InferenceSession::RegisterExecutionProvider] Having memory pattern enabled is not supported while using the DML Execution Provider. So disabling it for this session since it uses the DML Execution Provider.

2022-10-14 09:03:15.7286590 [W:onnxruntime:, session_state.cc:1030 onnxruntime::VerifyEachNodeIsAssignedToAnEp] Some nodes were not assigned to the preferred execution providers which may or may not have an negative impact on performance. e.g. ORT explicitly assigns shape related ops to CPU to improve perf.

2022-10-14 09:03:15.7357294 [W:onnxruntime:, session_state.cc:1032 onnxruntime::VerifyEachNodeIsAssignedToAnEp] Rerunning with verbose output on a non-minimal build will show node assignments.

2022-10-14 09:03:16.4002876 [W:onnxruntime:, inference_session.cc:492 onnxruntime::InferenceSession::RegisterExecutionProvider] Having memory pattern enabled is not supported while using the DML Execution Provider. So disabling it for this session since it uses the DML Execution Provider.

2022-10-14 09:03:17.9623164 [W:onnxruntime:, session_state.cc:1030 onnxruntime::VerifyEachNodeIsAssignedToAnEp] Some nodes were not assigned to the preferred execution providers which may or may not have an negative impact on performance. e.g. ORT explicitly assigns shape related ops to CPU to improve perf.

2022-10-14 09:03:17.9691546 [W:onnxruntime:, session_state.cc:1032 onnxruntime::VerifyEachNodeIsAssignedToAnEp] Rerunning with verbose output on a non-minimal build will show node assignments.

ftfy or spacy is not installed using BERT BasicTokenizer instead of ftfy.

100%|██████████████████████████████████████████████████████████████████████████████████| 51/51 [03:09<00:00, 3.72s/it]

Can you tell me what it means? And how can I fix it?
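Every line in that output starts with [W:onnxruntime:..., i.e. a warning rather than an error, and the final 51/51 progress bar suggests the image was generated; the messages themselves say that some operations were assigned to the CPU and that memory patterns are disabled under the DML Execution Provider. Compare Richard's log further down, which contains an [E:onnxruntime:...] line and then fails. A small sketch for sorting pasted logs by severity; `count_by_severity` is a hypothetical helper, and the letter codes other than W and E are assumed from onnxruntime's severity names.

```python
import re

# onnxruntime log lines start with e.g. "[W:onnxruntime:" (W = warning, E = error).
SEVERITY = re.compile(r"\[([VIWEF]):onnxruntime")
NAMES = {"V": "verbose", "I": "info", "W": "warning", "E": "error", "F": "fatal"}


def count_by_severity(log_text):
    """Count onnxruntime log lines per severity in a pasted log."""
    counts = {}
    for match in SEVERITY.finditer(log_text):
        name = NAMES[match.group(1)]
        counts[name] = counts.get(name, 0) + 1
    return counts
```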

Philoc

Sun, Oct 16, 2022, 00:22:14

AdX

Sun, Oct 16, 2022, 02:45:14

Star Wars

Sun, Oct 16, 2022, 02:47:43

stasisfield

Sun, Oct 16, 2022, 04:47:44

I tried to run that script to convert models, but I keep getting this error:

Traceback (most recent call last):

File "D:\aaa\convert_original_stable_diffusion_to_diffusers.py", line 688, in <module>

checkpoint = torch.load(args.checkpoint_path, map_location="cpu")["state_dict"] # Luxion edited - added map_location=torch.device("cpu") -- because on AMD gpu's loading into the GPU will probably cause errors

KeyError: 'state_dict'

Magnum

Sun, Oct 16, 2022, 06:07:44

Luxion

Sun, Oct 16, 2022, 16:42:48

That's because what you are trying to convert doesn't follow the original SD base format. For reference, all the custom fine-tuned models released out there that I've seen, such as Waifu Diffusion, follow the original SD format, so it makes me wonder whether what you are trying to convert is a real fine-tuned model or something else entirely.

You can even use this script with DreamBooth .ckpt files; it works just fine.

Normal .ckpt files contain a dictionary stored under the key 'state_dict', which is what needs to be loaded before conversion. As I understand it, the keys of that dictionary are the names of the model's parameters and the values are the weights themselves.

Make sure what you are trying to convert is a real fine-tuned model and not something else. If it is, then I guess you have to make the necessary changes on your own: try loading the whole .ckpt by removing '["state_dict"]', then write a loop that prints what is inside it to understand what you need to change.
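The "load the whole .ckpt and print what's inside" step can be sketched as below. It assumes the file loads with torch.load and holds a dict; the helper names are hypothetical. In original SD v1 checkpoints the weight names usually start with prefixes such as model.diffusion_model. (UNet), first_stage_model. (VAE) and cond_stage_model. (text encoder), so a top-level summary quickly shows whether a file is a full model, only a VAE, or something else.

```python
from collections import Counter


def get_state_dict(checkpoint):
    """Use checkpoint["state_dict"] when present, otherwise assume the dict *is* the weights."""
    if isinstance(checkpoint, dict) and "state_dict" in checkpoint:
        return checkpoint["state_dict"]
    return checkpoint


def key_prefixes(state_dict, depth=1):
    """Count weight names by their first `depth` dot-separated parts."""
    return Counter(".".join(key.split(".")[:depth]) for key in state_dict)


def load_checkpoint(path):
    """Load a .ckpt on the CPU (needs torch)."""
    import torch
    return torch.load(path, map_location="cpu")


# Hypothetical usage:
# sd = get_state_dict(load_checkpoint("model.ckpt"))
# for prefix, count in key_prefixes(sd).most_common():
#     print(prefix, count)
```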

If you can tell me where to find the file you are trying to convert then I can take a look into it.

@Magnum

Yes. I've integrated that feature myself but haven't actually checked how much those negative tags affect the results. But overall it seems to be working just fine.

Check out this link: https://github.com/huggingface/diffusers/pull/549/files

The file at the bottom is the ONNX pipeline. In your own pipeline, remove the code that shows in red and add the green code. Your file is located at: ...\virtualenv\Lib\site-packages\diffusers\pipelines\stable_diffusion\

Then, to use it, create a string containing the negative tags and pass it as an argument like so:

negativePrompt = "lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry"

...

image = pipe([Your Other Args Here], negative_prompt=negativePrompt).images[0]

Magnum

Sun, Oct 16, 2022, 23:38:35

Oh wow, that worked like a charm. My results are orders of magnitude better and people don't have 3 legs anymore.

Richard

Sun, Oct 16, 2022, 23:48:59

```

2022-10-16 16:46:29.2725618 [W:onnxruntime:, inference_session.cc:490 onnxruntime::InferenceSession::RegisterExecutionProvider] Having memory pattern enabled is not supported while using the DML Execution Provider. So disabling it for this session since it uses the DML Execution Provider.

2022-10-16 16:46:35.0815525 [E:onnxruntime:, inference_session.cc:1484 onnxruntime::InferenceSession::Initialize::::operator ()] Exception during initialization: D:\a\_work\1\s\onnxruntime\core\providers\dml\DmlExecutionProvider\src\ExecutionProvider.cpp(563)\onnxruntime_pybind11_state.pyd!00007FFC4C635FC1: (caller: 00007FFC4C635D62) Exception(2) tid(375c) 8007000E Not enough memory resources are available to complete this operation.

Traceback (most recent call last):

File "C:\stable-diffusion-amd\txt2img.py", line 2, in <module>

pipe = StableDiffusionOnnxPipeline.from_pretrained("./stable_diffusion_onnx", provider="DmlExecutionProvider")

File "C:\stable-diffusion-amd\virtualenv\lib\site-packages\diffusers\pipeline_utils.py", line 383, in from_pretrained

loaded_sub_model = load_method(os.path.join(cached_folder, name), **loading_kwargs)

File "C:\stable-diffusion-amd\virtualenv\lib\site-packages\diffusers\onnx_utils.py", line 182, in from_pretrained

return cls._from_pretrained(

File "C:\stable-diffusion-amd\virtualenv\lib\site-packages\diffusers\onnx_utils.py", line 151, in _from_pretrained

model = OnnxRuntimeModel.load_model(os.path.join(model_id, model_file_name), provider=provider)

File "C:\stable-diffusion-amd\virtualenv\lib\site-packages\diffusers\onnx_utils.py", line 68, in load_model

return ort.InferenceSession(path, providers=[provider])

File "C:\stable-diffusion-amd\virtualenv\lib\site-packages\onnxruntime\capi\onnxruntime_inference_collection.py", line 347, in __init__

self._create_inference_session(providers, provider_options, disabled_optimizers)

File "C:\stable-diffusion-amd\virtualenv\lib\site-packages\onnxruntime\capi\onnxruntime_inference_collection.py", line 395, in _create_inference_session

sess.initialize_session(providers, provider_options, disabled_optimizers)

onnxruntime.capi.onnxruntime_pybind11_state.RuntimeException: [ONNXRuntimeError] : 6 : RUNTIME_EXCEPTION : Exception during initialization: D:\a\_work\1\s\onnxruntime\core\providers\dml\DmlExecutionProvider\src\ExecutionProvider.cpp(563)\onnxruntime_pybind11_state.pyd!00007FFC4C635FC1: (caller: 00007FFC4C635D62) Exception(2) tid(375c) 8007000E Not enough memory resources are available to complete this operation.

```

Bob

Mon, Oct 17, 2022, 02:17:31

Astryx204

Mon, Oct 17, 2022, 02:37:37

Does anyone know what to do? This issue makes it unusable.

Sergey

Wed, Oct 19, 2022, 14:53:44

Richard

Wed, Oct 19, 2022, 16:24:31

(virtualenv) C:\Users\Richard\Desktop>python convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="./stable_diffusion_onnx"

Traceback (most recent call last):

File "C:\Users\Richard\Desktop\convert_stable_diffusion_checkpoint_to_onnx.py", line 23, in <module>

import onnx

ModuleNotFoundError: No module named 'onnx'

How to fix this?

Richard

Wed, Oct 19, 2022, 16:32:14

And then this happened:

```
(virtualenv) C:\Users\Richard\Desktop>python convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="./stable_diffusion_onnx"
Traceback (most recent call last):
  File "C:\Users\Richard\Desktop\convert_stable_diffusion_checkpoint_to_onnx.py", line 24, in <module>
    from diffusers import OnnxStableDiffusionPipeline, StableDiffusionPipeline
ImportError: cannot import name 'OnnxStableDiffusionPipeline' from 'diffusers' (C:\Users\Richard\Desktop\virtualenv\lib\site-packages\diffusers\__init__.py)
```

How do I fix this issue?

Ricard

Wed, Oct 19, 2022, 16:47:19

If anyone is stuck on this error:

ImportError: cannot import name 'OnnxStableDiffusionPipeline' from 'diffusers'

You have to replace all instances of OnnxStableDiffusionPipeline with StableDiffusionOnnxPipeline.
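Which of the two class names works depends on the diffusers version installed in your virtualenv. The following is a minimal sketch for checking that from inside the activated virtualenv. It uses only the standard library. The helper name `available_names` is hypothetical and is not part of diffusers. Also note that a name being present does not guarantee that its own dependencies, such as onnxruntime, are installed.

```python
import importlib


def available_names(module_name, candidates):
    """Return the subset of `candidates` that `module_name` exposes,
    or None if the module cannot be imported in this environment."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        # Covers ModuleNotFoundError, e.g. "No module named 'onnx'".
        return None
    return [name for name in candidates if hasattr(module, name)]


if __name__ == "__main__":
    # Hypothetical usage: run with the virtualenv activated.
    found = available_names(
        "diffusers",
        ["OnnxStableDiffusionPipeline", "StableDiffusionOnnxPipeline"],
    )
    if found is None:
        print("diffusers is not installed in this environment")
    else:
        print("pipeline classes available:", found or "none")
```

If only `StableDiffusionOnnxPipeline` is listed, the convert script is asking for a newer name than the installed diffusers provides. If the function returns None for `onnx`, the module is missing from the environment you are running in.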

Christian

Thu, Oct 20, 2022, 00:28:42

```
  File "convert_stable_diffusion_checkpoint_to_onnx.py", line 181, in convert_models
    onnx_pipeline = StableDiffusionOnnxPipeline(
TypeError: __init__() got an unexpected keyword argument 'vae_encoder'
```

Richard

Thu, Oct 20, 2022, 12:50:22

Wed, Oct 19, 2022, 16:47:19

If anyone is stuck on this error:

ImportError: cannot import name 'OnnxStableDiffusionPipeline' from 'diffusers'

You have to replace all instances of OnnxStableDiffusionPipeline with StableDiffusionOnnxPipeline.

Sorry, I'm not familiar with coding. How do I do this?

Luxion

Thu, Oct 20, 2022, 13:53:58

Long story short, you probably only need to edit the convert script. The diffusers library you downloaded is the same version everybody else who followed this guide has, which is v0.3.0. Only the convert script is different on your end, because it was updated and the pipeline was renamed, for whatever reason.

So simply open the convert script in a text editor, press Ctrl+F, and replace:

OnnxStableDiffusionPipeline

with:

StableDiffusionOnnxPipeline

Then save and try again.
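You can also script the same find-and-replace. The following is a minimal sketch that assumes the script is in the current folder under the name used throughout this thread. `rename_pipeline` is a hypothetical helper, and it keeps a `.bak` copy of the original. As the `vae_encoder` errors elsewhere in this thread suggest, the rename alone may not be enough if the rest of the script is also newer than diffusers v0.3.0.

```python
from pathlib import Path

OLD_NAME = "OnnxStableDiffusionPipeline"
NEW_NAME = "StableDiffusionOnnxPipeline"


def rename_pipeline(script_path, old=OLD_NAME, new=NEW_NAME):
    """Replace every occurrence of `old` with `new` in the file at
    `script_path`, saving a .bak copy first. Returns the replacement count."""
    path = Path(script_path)
    text = path.read_text(encoding="utf-8")
    count = text.count(old)
    if count:
        # Keep the untouched original next to the edited script.
        path.with_suffix(path.suffix + ".bak").write_text(text, encoding="utf-8")
        path.write_text(text.replace(old, new), encoding="utf-8")
    return count


if __name__ == "__main__":
    # Assumed file name; adjust if your copy is named differently.
    script = Path("convert_stable_diffusion_checkpoint_to_onnx.py")
    if script.exists():
        print(f"replaced {rename_pipeline(script)} occurrence(s)")
    else:
        print(f"{script} not found; run this from the folder that contains it")
```

Running it a second time reports 0 replacements, because the new name does not contain the old one.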

Luxion

Thu, Oct 20, 2022, 14:03:19

I found some differences in the script that might cause problems, so to make things simpler for you guys, I posted my v0.3.0 script on Pastebin:

https://pastebin.com/15HcfTXZ

Replace the content of your script with that code and it should work.

@Neil

The convert script is outdated and no longer works for this tutorial, plus they have finally released the img2img and inpaint pipelines.

We still don't have a UI, but now might be a good time to make a new guide, if you can :D

Nathan

Thu, Oct 20, 2022, 14:46:11

I tried to run:

```
py convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="./stable_diffusion_onnx"\
```

After a TON of warnings, I ended up with the same error as Christian:

```
Traceback (most recent call last):
  File "C:\Stable Diffusion\convert_stable_diffusion_checkpoint_to_onnx.py", line 227, in <module>
    convert_models(args.model_path, args.output_path, args.opset)
  File "C:\Stable Diffusion\virtualenv\lib\site-packages\torch\autograd\grad_mode.py", line 27, in decorate_context
    return func(*args, **kwargs)
  File "C:\Stable Diffusion\convert_stable_diffusion_checkpoint_to_onnx.py", line 186, in convert_models
    onnx_pipeline = StableDiffusionOnnxPipeline(
TypeError: StableDiffusionOnnxPipeline.__init__() got an unexpected keyword argument 'vae_encoder'
```

Here's a paste of all of the errors: https://pastebin.com/seKs5dX4

How can I fix it?

Kuojin

Fri, Oct 21, 2022, 01:05:59

Kuojin

Fri, Oct 21, 2022, 02:02:24

```
Traceback (most recent call last):
  File "C:\Users\Brian\Documents\stablediffusion\convert_stable_diffusion_checkpoint_to_onnx.py", line 24, in <module>
    from diffusers import OnnxStableDiffusionPipeline, StableDiffusionPipeline
ImportError: cannot import name 'OnnxStableDiffusionPipeline' from 'diffusers' (C:\users\brian\Documents\stablediffusion\virtualenv\lib\site-packages\diffusers\__init__.py)
```

No idea what is causing this one; any help would be appreciated. Thanks :)

Kuojin

Fri, Oct 21, 2022, 02:03:54

Kuojin

Fri, Oct 21, 2022, 02:05:59

John

Fri, Oct 21, 2022, 05:06:38

TeaAndBread

Fri, Oct 21, 2022, 12:18:46

```
  File "X:\Programme\DiffusionEnvironment\stable-diffusion\virtualenv\lib\site-packages\diffusers\pipelines\stable_diffusion\pipeline_onnx_stable_diffusion.py", line 218, in __init__
    super().__init__(
TypeError: OnnxStableDiffusionPipeline.__init__() missing 1 required positional argument: 'vae_encoder'
```

Does anyone know what might be causing this? I assumed it was a problem with the vae_encoder in the stable_diffusion_onnx folder, but that seems all right: it contains a file named model (133 MB), the same as the ONNX build on Hugging Face.

Srini

Sun, Oct 23, 2022, 10:34:44

```
(virtualenv) PS C:\Users\datta> python convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="./stable_diffusion_onnx"
Traceback (most recent call last):
  File "C:\Users\datta\convert_stable_diffusion_checkpoint_to_onnx.py", line 23, in <module>
    import onnx
ModuleNotFoundError: No module named 'onnx'
(virtualenv) PS C:\Users\datta>
```

srini

Sun, Oct 23, 2022, 10:58:52

```
(virtualenv) PS C:\Users\datta> python convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="./stable_diffusion_onnx"
Traceback (most recent call last):
  File "C:\Users\datta\virtualenv\lib\site-packages\huggingface_hub\utils\_errors.py", line 213, in hf_raise_for_status
    response.raise_for_status()
  File "C:\Users\datta\virtualenv\lib\site-packages\requests\models.py", line 1021, in raise_for_status
    raise HTTPError(http_error_msg, response=self)
requests.exceptions.HTTPError: 403 Client Error: Forbidden for url: https://huggingface.co/api/models/CompVis/stable-diffusion-v1-4/revision/main

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "C:\Users\datta\convert_stable_diffusion_checkpoint_to_onnx.py", line 215, in <module>
    convert_models(args.model_path, args.output_path, args.opset)
  File "C:\Users\datta\virtualenv\lib\site-packages\torch\autograd\grad_mode.py", line 27, in decorate_context
    return func(*args, **kwargs)
  File "C:\Users\datta\convert_stable_diffusion_checkpoint_to_onnx.py", line 73, in convert_models
    pipeline = StableDiffusionPipeline.from_pretrained(model_path, use_auth_token=True)
  File "C:\Users\datta\virtualenv\lib\site-packages\diffusers\pipeline_utils.py", line 288, in from_pretrained
    cached_folder = snapshot_download(
  File "C:\Users\datta\virtualenv\lib\site-packages\huggingface_hub\utils\_deprecation.py", line 98, in inner_f
    return f(*args, **kwargs)
  File "C:\Users\datta\virtualenv\lib\site-packages\huggingface_hub\utils\_validators.py", line 94, in _inner_fn
    return fn(*args, **kwargs)
  File "C:\Users\datta\virtualenv\lib\site-packages\huggingface_hub\_snapshot_download.py", line 157, in snapshot_download
    repo_info = _api.repo_info(
  File "C:\Users\datta\virtualenv\lib\site-packages\huggingface_hub\utils\_validators.py", line 94, in _inner_fn
    return fn(*args, **kwargs)
  File "C:\Users\datta\virtualenv\lib\site-packages\huggingface_hub\hf_api.py", line 1491, in repo_info
    return method(
  File "C:\Users\datta\virtualenv\lib\site-packages\huggingface_hub\utils\_validators.py", line 94, in _inner_fn
    return fn(*args, **kwargs)
  File "C:\Users\datta\virtualenv\lib\site-packages\huggingface_hub\utils\_deprecation.py", line 98, in inner_f
    return f(*args, **kwargs)
  File "C:\Users\datta\virtualenv\lib\site-packages\huggingface_hub\hf_api.py", line 1289, in model_info
    hf_raise_for_status(r)
  File "C:\Users\datta\virtualenv\lib\site-packages\huggingface_hub\utils\_errors.py", line 254, in hf_raise_for_status
    raise HfHubHTTPError(str(HTTPError), response=response) from e
huggingface_hub.utils._errors.HfHubHTTPError: (Request ID: 4O6kTK075qkZG_6Ei-bhN)
```

Colin

Sun, Oct 23, 2022, 17:51:03

Phil Z

Mon, Oct 24, 2022, 17:57:04

I can go in and download the ckpt file just fine.

Is there a script edit I can use to get that ckpt file for the conversion script?

Jade

Mon, Oct 24, 2022, 22:52:52

https://i.imgur.com/ICilzYC.jpeg

//

https://justpaste.it/96442

Jade

Tue, Oct 25, 2022, 10:06:43

https://justpaste.it/3zp02

Nathan

Tue, Oct 25, 2022, 15:38:51

2022-10-25 23:36:44.2193970 [W:onnxruntime:, inference_session.cc:492 onnxruntime::InferenceSession::RegisterExecutionProvider] Having memory pattern enabled is not supported while using the DML Execution Provider. So disabling it for this session since it uses the DML Execution Provider.

2022-10-25 23:36:45.8210854 [W:onnxruntime:, session_state.cc:1030 onnxruntime::VerifyEachNodeIsAssignedToAnEp] Some nodes were not assigned to the preferred execution providers which may or may not have an negative impact on performance. e.g. ORT explicitly assigns shape related ops to CPU to improve perf.

2022-10-25 23:36:45.8257960 [W:onnxruntime:, session_state.cc:1032 onnxruntime::VerifyEachNodeIsAssignedToAnEp] Rerunning with verbose output on a non-minimal build will show node assignments.

2022-10-25 23:36:53.0879966 [W:onnxruntime:, inference_session.cc:492 onnxruntime::InferenceSession::RegisterExecutionProvider] Having memory pattern enabled is not supported while using the DML Execution Provider. So disabling it for this session since it uses the DML Execution Provider.

2022-10-25 23:36:53.1552166 [W:onnxruntime:, session_state.cc:1030 onnxruntime::VerifyEachNodeIsAssignedToAnEp] Some nodes were not assigned to the preferred execution providers which may or may not have an negative impact on performance. e.g. ORT explicitly assigns shape related ops to CPU to improve perf.

2022-10-25 23:36:53.1585294 [W:onnxruntime:, session_state.cc:1032 onnxruntime::VerifyEachNodeIsAssignedToAnEp] Rerunning with verbose output on a non-minimal build will show node assignments.

ftfy or spacy is not installed using BERT BasicTokenizer instead of ftfy.

2022-10-25 23:36:53.9236496 [W:onnxruntime:, inference_session.cc:492 onnxruntime::InferenceSession::RegisterExecutionProvider] Having memory pattern enabled is not supported while using the DML Execution Provider. So disabling it for this session since it uses the DML Execution Provider.

2022-10-25 23:36:54.1009858 [W:onnxruntime:, session_state.cc:1030 onnxruntime::VerifyEachNodeIsAssignedToAnEp] Some nodes were not assigned to the preferred execution providers which may or may not have an negative impact on performance. e.g. ORT explicitly assigns shape related ops to CPU to improve perf.

2022-10-25 23:36:54.1057708 [W:onnxruntime:, session_state.cc:1032 onnxruntime::VerifyEachNodeIsAssignedToAnEp] Rerunning with verbose output on a non-minimal build will show node assignments.

2022-10-25 23:36:55.5543313 [W:onnxruntime:, inference_session.cc:492 onnxruntime::InferenceSession::RegisterExecutionProvider] Having memory pattern enabled is not supported while using the DML Execution Provider. So disabling it for this session since it uses the DML Execution Provider.